Team: Mackenzie Cumings and Anusha Ramesh.

Key Result(s): TCP incast collapse should be observed as the number of servers taking part in a “barrier synchronized” transaction increases, given the default TCP minimum retransmission timeout of 200 ms. We were not able to reproduce this key result. At high bandwidths such as 1 Gbps we obtained only false positives, which we attribute to Mininet. When we switched to lower bandwidths, we could not produce incast collapse at all: we did not see the large number of retransmissions that incast collapse requires. We also realized that a decrease in goodput is not a sufficient indicator of incast collapse.

Source(s):

Vijay Vasudevan et al., Safe and Effective Fine-grained TCP Retransmissions for Datacenter Communication, SIGCOMM 2009

Contacts:

Mackenzie Cumings- mcumings@stanford.edu, Anusha Ramesh- aramesh2@stanford.edu

Introduction:

This project centers on TCP data transfers in data centers. The concept we chose to reproduce is TCP incast collapse, as presented in the paper “Safe and Effective Fine-grained TCP Retransmissions for Datacenter Communication”.

The main conditions for incast collapse are as follows:

1) High bandwidth, low latency, and small switch buffers.

2) Clients issue “barrier synchronized” requests in parallel. This means that the client splits the request for data into equal chunks and sends them to multiple servers. The client does not proceed until it has received the data chunks from all the servers.

3) Since the data is split into small chunks across servers, each server returns only a small amount of data per request.

This type of workload can overflow the switch buffers, which results in TCP timeouts. Say one server involved in the transaction experiences a timeout while the rest of the servers finish sending their responses. The client has to wait out the default TCP timeout of 200 ms before it can receive a response from the server that experienced the timeout. The client cannot proceed in the meantime, so the client link is underutilized, leading to a loss of goodput, i.e. the throughput seen by the application.
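To make the cost of a single timeout concrete, here is a rough back-of-the-envelope sketch. It assumes the 1 MB block and 1 Gbps link used later in this post, treats 1 MB as 10^6 bytes, and ignores protocol overhead and latency. The function name is ours, not something from the paper.

```python
def goodput_mbps(block_bytes, link_bps, stall_s=0.0):
    """Goodput of one barrier transaction in Mb/s, with an optional idle stall."""
    transfer_s = block_bytes * 8 / link_bps           # time on the wire at line rate
    return block_bytes * 8 / (transfer_s + stall_s) / 1e6


ideal = goodput_mbps(1_000_000, 1e9)                  # no losses at all
stalled = goodput_mbps(1_000_000, 1e9, stall_s=0.2)   # one 200 ms timeout
print(f"no timeout: {ideal:.0f} Mb/s, one 200 ms timeout: {stalled:.1f} Mb/s")
```

Under these assumptions the transfer itself takes about 8 ms, so one 200 ms stall dominates the transaction time and goodput falls to a few percent of the link rate.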

An important result of the paper concerns the retransmission timeout. For datacenter environments, where latency is on the order of microseconds, retransmission timeouts should be of the same order as the network latency. High values of RTOmin cause problems in such environments.

In our project we concentrated on reproducing the results of the paper that a) show the occurrence of incast collapse and b) show the variation of goodput with various values of rto_min. We tried to replicate the scenario described in the paper with a Mininet topology, writing our own client and server applications. To set rto_min on the order of milliseconds, we used the existing Linux commands.

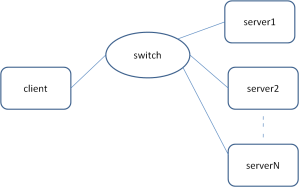

Topology:

Methods:

Language used: Python. The implementation consists of Python scripts.

Implementation Logic:

1) To create barrier synchronized requests, the client opens TCP socket connections to the servers, sends each server a request for its data chunk, then polls the connections to see whether it has received the respective chunks. Each data chunk is 1MB/N in size. Each server receives the request and sends the number of bytes dictated by the parameters in the program.

2) As the client polls, it checks that every server has sent the required number of bytes for that particular transfer. Once all the bytes have been received, the transaction is marked as complete.

3) Goodput is calculated as the total size of the data requested per barrier transaction divided by the mean time per barrier transaction.
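The three steps above can be sketched as follows. This is a minimal illustration and not our actual scripts: the toy server, the request format (the chunk size as a decimal string), and all function names are assumptions made for the example, and 1 MB is taken as 10^6 bytes.

```python
import selectors
import socket
import threading
import time

BLOCK_BYTES = 1_000_000  # data requested per barrier transaction (assumed 10**6 bytes)


def serve_once(listener):
    """Toy server: accept one connection, read the requested size, send that many bytes."""
    conn, _ = listener.accept()
    with conn:
        want = int(conn.recv(64).decode().strip())
        conn.sendall(b"x" * want)


def start_toy_servers(n):
    """Start n toy servers on localhost and return their (host, port) addresses."""
    addrs = []
    for _ in range(n):
        listener = socket.socket()
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        threading.Thread(target=serve_once, args=(listener,), daemon=True).start()
        addrs.append(listener.getsockname())
    return addrs


def barrier_transaction(addresses, block_bytes=BLOCK_BYTES):
    """Request block_bytes/N from each of N servers; return the elapsed seconds."""
    chunk = block_bytes // len(addresses)
    sel = selectors.DefaultSelector()
    remaining = {}
    start = time.monotonic()
    for addr in addresses:
        sock = socket.create_connection(addr)
        sock.sendall(f"{chunk}\n".encode())
        sel.register(sock, selectors.EVENT_READ)
        remaining[sock] = chunk
    # Poll until every server has delivered its full chunk (the barrier).
    while remaining:
        for key, _ in sel.select():
            sock = key.fileobj
            data = sock.recv(65536)
            remaining[sock] -= len(data)
            if remaining[sock] <= 0 or not data:
                sel.unregister(sock)
                sock.close()
                del remaining[sock]
    sel.close()
    return time.monotonic() - start


def goodput_bps(block_bytes, transaction_times):
    """Data per barrier transaction divided by the mean transaction time, in bits/s."""
    mean_time = sum(transaction_times) / len(transaction_times)
    return block_bytes * 8 / mean_time


if __name__ == "__main__":
    times = [barrier_transaction(start_toy_servers(4)) for _ in range(3)]
    print(f"goodput: {goodput_bps(BLOCK_BYTES, times) / 1e6:.1f} Mb/s")
```

On localhost this only exercises the control flow. Any incast behavior would come from running the same logic over a constrained topology.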

Test Settings:

- Link Bandwidth: 1 Gbps

- Host and switch output queue size: 32KB

- Request chunk per server: 1MB/N, where N is the number of servers

- Setting rto_min: to set rto_min for a host in EC2 we used the following command:

$ sudo ip route replace dev [device name] rto_min [value]ms

Results: Unfortunately, our results are negatives and false positives.

Our graphs are presented below.

False Positives:

We got false positives while trying to reproduce the results of the incast experiment. This caught us off guard until the very end.

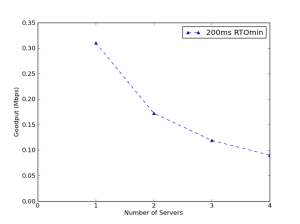

Replicating Figure 1 (False Positives):

We set each link to 1 Gbps (as in the experiment setting) and found that goodput decreased as the number of servers increased. But Mininet is unstable at this bandwidth, so this result cannot be relied on. The graph is shown in Figure 1b.

Figure 1: Occurrence of collapse with increase in the number of servers involved in a barrier synchronized transaction (the x-axis shows the number of servers in multiples of 10 and the y-axis shows goodput in multiples of 100 Mb/s)

We then tried a lower bandwidth of around 200 Mbps, and we did see retransmissions and a fall in goodput. But the aggregate bandwidth of the system (with 20-30 servers) was again as high as 6 Gbps, so these values could not be relied on either. The graph was similar to Figure 1b.

We then lowered the bandwidth further (10 Mbps per link) and tried to create incast collapse. We did not succeed in this case (buffer size of 1 packet, latency of 400 ms): we saw neither the retransmissions nor the drastic fall in goodput that indicate incast collapse.

Replicating Figure 2: Varying rto_min and its effect on incast collapse (Negative Result)

We were not able to see goodput vary with rto_min. When we first hit this issue, our first question was whether we were creating incast collapse at all with this setting. This in fact led us to suspect that the bandwidth we were using (1 Gbps) might not produce valid output. We therefore tried lower bandwidths such as 100 Mbps and got the same flat graph (shown on the right-hand side): goodput does not vary much across values of rto_min. We then checked whether rto_min was being set at all. We used the ss -i command to get socket statistics and found that rto_min did not seem to change, even though we had used the Linux command that is supposed to set it. Two things could be wrong here: a) rto_min is not being set correctly because of kernel problems, or b) since the incast collapse we created in the previous scenario was a false positive, this graph is just a consequence of that.
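A check like the ss -i one described here can be automated. The sketch below pulls the rto field out of ss -i style output. Note that ss reports each connection's current retransmission timeout in milliseconds rather than rto_min itself. Since the current timeout should not fall below the configured minimum, a value that stays near 200 ms on a low-latency link would suggest the setting did not take effect. The sample text is made up for illustration and is not output from our runs.

```python
import re

# Illustrative text in the shape of `ss -i` output; addresses and numbers are made up.
SAMPLE_SS_OUTPUT = """\
State  Recv-Q  Send-Q  Local Address:Port  Peer Address:Port
ESTAB  0       0       10.0.0.1:5001       10.0.0.2:43210
\t cubic wscale:7,7 rto:204 rtt:4.5/2.25 ato:40 mss:1448 cwnd:10
"""


def current_rtos_ms(ss_output):
    """Return the rto value (in ms) reported for each socket in `ss -i` output."""
    return [float(v) for v in re.findall(r"\brto:(\d+(?:\.\d+)?)", ss_output)]


print(current_rtos_ms(SAMPLE_SS_OUTPUT))
```

In practice the text would come from running ss -i on the host under test, for example through subprocess, before and after changing rto_min.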

Figure 2: Presents variation of Goodput with rto_min

Stabilizing our results/ Accounting for variation:

When running the scripts we pass a parameter called time, which sets the number of seconds to run the client application for a particular value of N (the number of servers). This gives us multiple rounds of barrier synchronized transactions within the duration of the experiment, and we calculate the average goodput over those rounds.

In the course of trying to find parameters that create incast collapse, we tried various latencies and buffer sizes, but this did not produce reliable changes in goodput.

Our final efforts focused on monitoring the TCP connections for retransmissions while using valid bandwidths. We ran simulations with 1 to 4 servers on 100 Mbps links, so as not to exceed the total bandwidth of 400 Mbps that Mininet is supposed to handle. We were unable to cause any of the retransmissions that would necessarily happen if there were incast collapse. Figure 3 illustrates a decrease in goodput that occurred even though there were no retransmissions.
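The bandwidth budgeting behind these runs is simple arithmetic, sketched below. It counts aggregate bandwidth the way this post does (number of servers times per-link bandwidth), and it takes the 400 Mbps limit quoted above as a parameter, not as a measured property of Mininet. The names are ours.

```python
MININET_BUDGET_MBPS = 400  # aggregate limit quoted in this post; an assumption, not a measurement


def aggregate_mbps(num_servers, link_mbps):
    """Total bandwidth the emulator must sustain if every server link is busy."""
    return num_servers * link_mbps


def within_budget(num_servers, link_mbps, budget_mbps=MININET_BUDGET_MBPS):
    """True if the configuration stays within the assumed aggregate limit."""
    return aggregate_mbps(num_servers, link_mbps) <= budget_mbps


for servers, link in [(30, 200), (4, 100)]:
    total = aggregate_mbps(servers, link)
    verdict = "ok" if within_budget(servers, link) else "over budget"
    print(f"{servers} servers x {link} Mbps = {total} Mbps ({verdict})")
```

The first configuration is the 200 Mbps case with 30 servers (6 Gbps in aggregate), and the second is the largest configuration used in the final runs.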

Figure 3: mininet results for 100Mb/s links with 1 packet buffer sizes

Lessons Learned:

Scaling Limits:

We were bounded by the bandwidth limitations of Mininet, which affected our results. Mininet results are not dependable at 1 Gbps. For lower values of bandwidth we could not find the right combination of buffer size, block size, RTT, and rto_min that would create incast collapse.

Implementation Experiences:

Initially, recreating an incast-like scenario looked quite straightforward. The paper gave us the information we needed to write such an application, and we were able to produce a first graph closely resembling the one in the paper. But we later realized that recreating the graph is not sufficient to prove that we had recreated the environment. We then monitored retransmissions on the hosts to confirm whether incast collapse had actually happened. With the 1 Gbps and 200 Mbps links we did see retransmissions increase with the number of servers. The problem is that Mininet cannot be trusted at these bandwidths, so we cannot say for sure that we reproduced an incast environment.

For the second graph, we faced the tricky part of setting rto_min in microseconds for one reading on the x-axis. To make this feasible, we had to build our own custom kernel with the patches provided by the authors. We did not succeed in this task because of the following hurdles:

1) Initially we wanted to build our custom kernel on a Linux version that is compatible with the hr timer patches (the patches are not up to date). We did that and booted our kernel, and it was quite stable, but of course we did not have Mininet or Open vSwitch on this Linux version. So we had to move to the latest 3.2 Linux kernel, which is supposed to have features like CPU bandwidth limiting and virtual namespaces, which are needed for Mininet.

2) We applied the hr timers patch to the latest 3.2 Linux kernel and built our own custom kernel. But this kernel was far from stable. Whenever we ran a program that set the rto_min value, the system became unresponsive. So unfortunately, we could not collect the reading corresponding to the hr timers.

3) We tried to run the scripts provided by our TAs for bringing up the old kernel versions with Mininet and Open vSwitch capabilities, but this also did not turn out to be fruitful. The custom kernel with the hr patch did not even boot.

Main lesson learned: Debugging in EC2 is quite a pain, especially for kernel issues. We did not have access to the console. We were running out of time and could not try debugging in an individual VM. Given the chance again, we would do all the development for bringing up the custom kernel in an individual VM and then port it over to EC2.

What we would try to change if given a chance again:

Looking back, I would say that we tried our very best to create an incast-like environment with Mininet. One thing I would like to change about our implementation is to move from polling to threading, to make sure that we don’t falter when making synchronized reads and writes. We did have a threaded implementation in C (server and client), but we could not finish it because we were bogged down by the kernel issues we encountered. One suspicion we have is that the environment we created is not a very good example of synchronous reads and writes.

Tips for folks who want to recreate TCP incast collapse in the future:

1) Consider implementing the client and server functions in C with a threaded model, just to make sure you don’t hit any desynchronization issues.

2) If you are trying to create an incast scenario with Mininet, probe various values for buffer size, latency, and bandwidth. We were so sure of our settings initially that we ended up in trouble later.

3) Even when goodput decreases, find out whether it is really due to retransmission timeouts. Tcpdump and netstat outputs are very useful for this.
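For tip 3, one lightweight alternative to reading netstat output by eye is to sample the kernel's TCP counters in /proc/net/snmp (the same counters netstat -s draws on) before and after a run and compare RetransSegs. The sketch below parses that format. The sample text is illustrative, not data from our experiments, and the function name is ours.

```python
# Illustrative excerpt in the /proc/net/snmp format; the numbers are made up.
SAMPLE_SNMP = """\
Tcp: RtoAlgorithm RtoMin RtoMax MaxConn ActiveOpens PassiveOpens AttemptFails EstabResets CurrEstab InSegs OutSegs RetransSegs InErrs OutRsts
Tcp: 1 200 120000 -1 12 8 0 0 3 5000 4800 17 0 2
"""


def tcp_retrans_segs(snmp_text):
    """Return the RetransSegs counter from /proc/net/snmp-style text."""
    tcp_lines = [line.split() for line in snmp_text.splitlines()
                 if line.startswith("Tcp:")]
    header, values = tcp_lines[0], tcp_lines[1]  # first line names, second line values
    return int(values[header.index("RetransSegs")])


print(tcp_retrans_segs(SAMPLE_SNMP))
```

Reading the file once before the experiment and once after, then subtracting, gives the number of segments retransmitted during the run. A drop in goodput with a difference of zero would point away from incast collapse.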

With these lessons learnt, we think the chances of reproducing incast in mininet would be better next time.

Instructions to Replicate This Experiment:

1. On Amazon’s EC2, launch an instance of cs244-mininet (ami-cb8851a2), of type c1.medium.

2. Copy the necessary Python scripts to a directory within your home directory on the instance.

3. Open a shell and go to the directory containing the Python scripts copied in step 2.

4. Execute the following commands:

$ sudo chown ubuntu ~/.matplotlib

$ chmod 777 *.py

The first command is necessary because .matplotlib is initially owned by root. The second makes the Python scripts executable.

5. To run the experiment, execute the following command:

$ sudo ./reproduceFigure.py

On the first execution, it will fail after a few seconds. Execute the command again and it should run to completion. The experiment may take a couple of hours to complete.

6. As the script runs, it periodically outputs the goodput values it records. When the script completes, three image files named figure1.png, figure2.png and figure3.png will be in the current directory. These correspond to Figure 1b, Figure 2b, and Figure 3 in this report.

The replication was really straightforward: we cloned the scripts, set up permissions, and left it to run. It did run for a while (about 35 minutes), but the end result was that we got very similar figures. The exception was the last graph, where our throughput actually stayed consistent.

Our results:

The Figure 3 generated by the code in git is for 10 Mbps bandwidth. This is the behavior we saw at 10 Mbps but did not add to the blog. The goodput is constant because we could not see any incast collapse or retransmissions at 10 Mbps.

In the blog, we added the figure for 100 Mbps. We had been trying out different bandwidths and latencies. Sorry for the confusion caused.

Thanks for replicating in spite of the delay.

Hi,

Where can I have the code to repeat this experiment ?

Thank you