Robert Gasparyan and Jongho Shin

Original Problem

TCP is the most extensively used Internet transport protocol. TCP’s congestion control algorithm is one of the most integral parts of TCP and years of research have gone into optimizing it. The key objectives of TCP are to provide good throughput to the end users while keeping the network from getting over-congested(or possible collapse) and maintaining fair sharing of bottleneck links. However, it was discovered that these conditions only hold if both the sender and the receiver follow the protocol exactly, if not, TCP will act in unexpected ways that sometimes can be harmful for the network but benefit a greedy host . Authors of this paper showed three attacks, which take advantage of the TCP’s congestion control mechanism and allow a misbehaving receiver to derive a standard TCP sender arbitrarily fast.

Motivation: Why is the problem important/interesting?

This attacks exploit TCP’s assumption that the end hosts generate correct output because otherwise they won’t be able to guarantee data integrity, but at least two of these attacks increase the rate without losing data integrity and the third attack can use new features in HTTP 1.1 to fill in the missing gaps.

Notice that the end user has a great incentive to modify TCP to misbehave in these ways because it could significantly increase throughput at the beginning of a TCP connection and since most TCP flows are pretty short this could make for a better user experience at the expense of others. But if this attacks are implemented by a bigger fraction of users it could lead to an Internet-Wide congestion collapse[1].

Results: What did the original authors find?

The authors implemented all three attacks and tested requesting index.html from a server and timed the packets sent out by the server. In Attack 1 (Ack Division) all packets are almost sent in a single burst, Attack 2 (DupAck spoofing) all but the first two segments are sent in a single burst, and for Attack 3(Optimistic acking) packets are sent much earlier than they would be under normal TCP receiver (this does not include checking for possible missing packets).

Subset Goal: What subset of results did you choose to reproduce?

We choose to reproduce all three attacks. This paper was published in 1999, TCP’s congestion control algorithm has evolved a lot since then and it is interesting to see if these attacks are still possible.

Subset Results: How well do the results match up? Explanation if you see significant differences.

We found that the first two attacks do not work in current Linux distribution(Ubuntu 12.10). But the last attack is still feasible.

Attacks:

1. ACK Division

This attack has been prevented in the current version of TCP, throughput was not affected.

2. DupACK Spoofing

This attack has been prevented in the current version of TCP, throughput got worse.

3. Optimistic ACKing

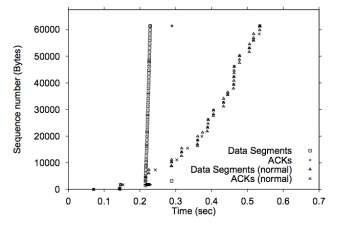

This attack is still feasible. The graph below demonstrates how optimistic acking forced sender’s cwnd to increase much faster. As a result the data transfer that would normally take 300ms completed in less 10ms.

Challenges: What challenges did you face in implementation?

To see the feasibility of attack in Linux, we choose to manipulate the Linux Kernel code. In order to manipulate the Kernel code, we made use of JProbe(KProbe). JProbe is a Linux Kernel library for instrumentation, which allows breaking into any Kernel routine dynamically. [http://phrack.org/issues/67/6.html] Using JProbe, we made a Loadable Kernel Module (LKM), which deploy the three attacks. The drawback of JProbe is that we cannot change the actual function; we can only add some routines. This limits the ability of modification compared to editing Kernel code itself, and it requires in-depth understanding of the Kernel code. However, one of the main advantages of LKM is that we can make the code portable, thus anyone can reproduce the result easily in most of Linux distributions.

*OS related challenges: In order to modify the Kernel’s behavior, we had to analyze the Kernel code first. And we had to spend a lot of time debugging the Kernel code, since debugging the Kernel code is a lot harder than debugging an application code.

At first, we used tcp_probe to see sequence numbers of the TCP flow. But tcp_probe starts to show the flow from the middle, even though we need to concentrate on the beginning of the TCP flow. Thus we should use tcpdump instead of tcp_probe.

Another obstacle was the initial congestion window. In the paper it always start from 1, but these days it usually starts from 10. Thus to mimic the environment of the paper, we should find the way to decrease it to 1. We could achieve this by the following command. ‘ip route change 10.0.0.0/8 dev sender-eth0 proto kernel scope link initcwnd 1’

Critique: Critique on the main thesis that you decided to explore – does the thesis hold well, has it outdated, does it depend on specific assumptions not elucidated in the original paper?

*The first two attacks, ACK division and dup ACK, are outdated.

RFC 5681

ACK Divison:

"cwnd MUST NOT be increased by more than SMSS

bytes per RTT. This method both allows TCPs to

increase cwnd by one segment per RTT in the face

of delayed ACKs and provides robustness against

ACK Division attacks."

DupACK Spoofing:

"TCPs now MAY limit the number of duplicate ACKs

that artificially inflate cwnd during loss

recovery to the number of segments outstanding

to avoid the duplicate ACK spoofing attack"

Even more, the RFC 3465 suggests “TCP Congestion Control with Appropriate Byte Counting (ABC)”. This RFC suggests increasing cwnd based on the number of bytes being acknowledged by each arriving ACK, rather than by the number of ACKs that arrive. This can be enabled on any Linux distribution by the following command. ‘echo 1 > /proc/sys/net/ipv4/tcp_abc’. And it will also prevent any attacks based on the number of ACKs.

Another assumption that does not hold today is that the initial congestion window does not start from 1. In Linux, it starts from about 10. So the slow start is not that slow, and we don’t need these attacks to make the sender start faster.

Another minor change is that the sequence number does not start from 1 anymore, so we had to use relative sequence number to plot the graphs.

* They did not provide an algorithm for OptACKing

Unlike the first two attacks, OptACKing can be very challenging to implement because it requires a knowledge on the attackers part about when/which segments have already been sent. Notice that it is very important to keep the attacker synchronized with the sender because if ACKs are sent before the packets left the sender, they will be ignored and the cwnd won’t increase, on the other hand if they are sent too late they won’t have the desired impact. The implementation we ended up with sends a series of ACKs with incremented ackno after each other every T seconds. T should roughly be equal to the time it takes the sender to put a packet(a whole window of packets) on the wire, this will guarantee that the OptACK arrives after the corresponding segment is sent.

Extensions: Any exploration outside the results in the paper?

The original paper does not specify which congestion control algorithm was used for the experiments. Judging by the timeline we assumed that it was Reno, but we also tried the attack against NewReno, Cubic, Illinois, Hybla, LP and Vegas. The results varied a little but the impact was similar.

Platform: Choice of your platform, why that particular choice and how reproducible is the entire setup, what parameters of the setup you think will affect the reproducibility the most?

We did our experiment on Amazon EC2 machine, and the Linux distribution was Ubuntu 12.10(Quantal Quetzal).

The entire setup will be easy on most Linux distributions, it only requires Kernel headers, mininet, and iperf 2.0.

Our Mininet topology consisted of two hosts connected through a single switch. We ran iperf server on one and iperf client on the other(in this case iperf server is the attacker because iperf checks traffic from client to server)

README: Instructions on setting up and running the experiment.

System requirements : mininet, iperf2(not compatible with iperf3), python 2.6 or above, linux kernel headers

For security reasons, we do not publish the code. Please contact robertga@stanford.edu or jongho@stanford.edu for the code and instructions to reproduce the experiment.

We give a score of 5. Robert and Jongho emailed us instructions for reproducing their results. We were able to reproduce their plots easily, using a c3.large instance and the default cs244 ami, and with little additional effort.

Thanks,

Alex Eckert & Vikas Yendluri