Elmer Le & Sam Keller

1. Goals: What problem was the original paper trying to solve?

The core underlying issue in this paper is that a good portion of TCP traffic today consists of very short flows. These flows finish transmitting data within a few round trips, few enough that TCP’s slow start phase has not even completed yet. This leads to several subproblems, outlined in the paper:

- The primary result is that latency could be shorter by several round trips, since the TCP flow spends several round trips with very small congestion window sizes.

- Modern browsers use many simultaneous TCP flows to download large webpages quickly, which means a good portion of the load time is spent waiting for new flows to ramp up in slow-start.

- Short flows get the short end of the stick when competing with other large bandwidth-filling TCP flows.

- Whenever TCP needs to redo the slow-start phase, it takes a few RTTs longer to get its congestion window back up.

The paper proposes increasing TCP’s initial congestion window from the usual 3 segments to 10, which would alleviate all of these issues. In particular, they focus on measuring latency improvements from Google data centers with existing traffic flows. This is sufficient to show that increasing the congestion window gives significant performance improvements in practice for most network configurations.

2. Motivation: Why is the problem important/interesting?

The paper notices that, while the Internet and networking have grown dramatically in the last decade, TCP’s initial congestion window has not changed at all. It aims to update TCP to the modern networking environment to reduce web latency. Remarkably, its method of increasing the initial congestion window is minimally invasive, and can be configured on a per-host basis without needing any architectural changes.

3. Results: What did the original authors find?

The authors monitored performance at large datacenters over two weeks: one week with standard initial congestion windows, and another week with increased windows. They found that, for all types of traffic, latency improved. The amount of improvement depended on the specific flow characteristics. (Low-bandwidth and long-RTT flows showed the most improvement.)

4. Subset Goal: What subset of results did you choose to reproduce?

We tried to replicate the first two graphs in figure 5 of the paper, which shows how latency improves with varying RTT, bandwidth:

The paper also included a third graph, measuring average latency when bucketed by BDP, or bandwidth-delay product. We decided not to include an exact version of this graph, since we could not model the distribution of RTT and bandwidth accurately enough to give anything meaningful; instead, we produced a heatmap that shows the same trends without requiring any understanding of the network distribution. More detail on this choice is in the results section.

5. Subset Motivation: Why that particular choice?

Those graphs directly show the relationship that the paper is trying to improve across all types of flows, by showing that latency strictly improves when using an initial congestion window of ten segments. Compared to the other experiments in the paper, this result has the broadest relevance to networks outside of Google, as well as being the best indicator for the success of the proposed change.

6. Subset Results:

We ran a similar simulation in Mininet, where we had three hosts connected to a switch: a host and two clients. One client repeatedly requests data of varying sizes from the server, and we time the latency of these requests. The other client opened a long iperf connection with the server to simulate competing traffic.

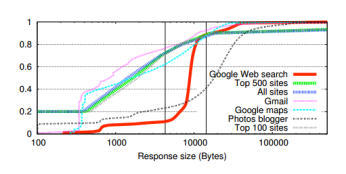

We created an experiment with this topology for each initial window size (3 or 10 segments) and each combination of RTT and bandwidth, from the buckets used in the graphs in the original paper. To simulate the variation in response size seen on the web, we sample from the CDF of all web traffic given in the original paper (included below), and measure the improvement in latency.

This experiment gave the following improvements, given as a percentage improvement in average latency:

Using the bucketed data, we recreated the two original graphs. We assumed that of the subnets measured by the original paper, RTT and bandwidth were independently distributed. This is not a particularly strong assumption, but it is the only possible solution without inventing a distribution on our own. These graphs are a weighted average of the latency improvements of the columns and rows, respectively, of the above graph, where the weights are equal to the frequency of the buckets (listed with each bucket on the original graphs).

First, notice that the results for all experiments with an RTT of 1000 milliseconds are unpredictable and follow no particular pattern. Unlike all other experiments, which are remarkably consistent from run to run, the 1000ms trials that we ran have high variance in their outcomes. They still seem to follow the same overall trend, but appear to be more susceptible to random packet drops that distort the results from run to run. This is discussed more in the challenges section. The corresponding bar is included in the RTT graph for completeness, but these experiments were ignored when producing the bandwidth graph.

The primary result of the paper, that both absolute and percentage improvement increase as RTT grows, still holds strongly. In fact, not only were we able to replicate the trend, but the RTT absolute numbers match almost identically with those given in the paper (ignoring the anomalous 1000ms case). We also see that the second result, that improvement also increases with BDP, holds: the heatmap shows that for a fixed latency network, increasing the bandwidth also allows better performance for a larger initial congestion window.

Our graph of the trend over different bandwidths does not match with the trend in the original, but it is easy to explain. We believe this is the result of our flawed assumptions about the relationship between latency and bandwidth in the subnets. While we assumed that they were independent, it is likely that a network with higher bandwidth would also have lower latency. This means that most of the traffic falling into the rightmost bucket in the Google dataset would also have less improvement than we measure in our setup. Instead, this second figure mainly displays the advantage of a network with a higher BDP.

7. Challenges: What challenges did you face in implementation?

The biggest difference between our simulation and the paper’s experiment was that they were able to monitor real traffic in a large datacenter, whereas we needed to come up with our own distributions for flow characteristics. We did our best to infer distributions of flow characteristics from the paper, but this is definitely not perfect. For example, the paper provides bucket distributions of RTT and bandwidth, but these are likely not independent. We assumed independence for producing our RTT and bandwidth graphs, since it wasn’t core to the overall trend of the graph. However, dependence was one reason we elected not to reproduce the BDP graph — the codependence of RTT and bandwidth is too important to the distribution of BDP buckets, so we would not be able to get an accurate distribution.

Early experiments gave good measurements for all smaller RTT cases, but with two outliers at RTTs of 1000ms and 3000ms, discussed below.

As we mentioned in the results section, simulations on networks with a round-trip latency of 1000ms varied greatly from trial to trial, and did not always follow the expected overall trend. We spent a lot of time investigating the flows that we created in this simulation, but were not able to come up with any meaningful differences between this and the lower latency networks that were possible causes. The results are included for completeness, but at this time we cannot explain why it does not agree with the rest of the data.

Simulations on networks with a round-trip latency of 3000ms consistently returned no improvement, regardless of network bandwidth; in other words, each request took exactly the same amount of time, regardless of initial window size. From looking at TCP dumps of the flows, we suspect that this is a property of TCP on high-latency networks: the default initial retransmission timer for a new connection in Linux is 3 seconds, which means that any request on this network is guaranteed to be retransmitted before an acknowledgement is received. Resolving this would require us to modify and recompile the Linux kernel with a larger timeout. We decided not to do this for a couple reasons:

- As we can see from the distribution of the networks, there are very few subnets with latency at or above three seconds (less than 1%). This means that this data is not representative for most networks, and can safely be ignored.

- Simulation time is dominated by the latency of individual requests, and waiting for a curl to terminate. By removing these simulations from the experiment, which are much longer than all others, we were able to increase the number of trials run in other experiments and produce more accurate results.

We also tried to run multiple RTT/BW configurations simultaneously by having multiple clients make requests to the server simultaneously. However, we could not produce sensical results this way. We suspect the reason has to do with Python’s multithreading limitations. The GIL prevents us from using true parallelization using threads, so that might have thrown off curl timings. We also tried to use multiple processes, but that produced very strange results as well. We were left to run configurations serially, resulting in a long overall runtime for the simulation. Another potential improvement that we didn’t get to fully try out is to parallelize at the experiment level rather than within each experiment. We would try to run multiple Mininet instances simultaneously.

Lastly, in our experiment design, we used cURL to make requests. In the paper’s experiments, the datacenter timed latency by opening a connection with a client, sending it a bunch of data, and then waiting for the return acks, so the server was the one initiating TCP connections. Our setup uses cURL from the client to open a TCP connection to the server. However, the overall pattern is similar enough that we felt it should be sufficient to replicate the behavior in the paper.

8. Critique

Overall, the paper’s claim that using an initial congestion window of 10 rather than 3 improves latency holds true. This seems to be pretty simple — it takes less RTTs to transmit data because we have more data in transit at once. When we increase the RTT of a flow, the percentage and absolute improvement both increase in a similar manner to the paper. However, when we increase bandwidth, our trend looks slightly different.

9. Platform

We reproduced this research on Mininet, because we were familiar with the environment and it supported all the features necessary for the project. We used the same EC2 instance as in earlier projects, which is more than sufficient for the topology we used in our experiments. This makes it incredibly easy to reproduce.

10. README:

To run this simulation:

- Launch an EC2 instance using the community AMI “CS244-Spr15-Mininet”. Make sure port 22 (ssh) is open in your security group.

- Clone the repo: https://github.com/elmerle/cs244-3.git

- Run ./run.sh from the cs244-3 directory (requires sudo).

This will run the experiment and save the plots to the output/ folder. The simulation takes on the order of an hour to run. If you have window forwarding set up, you can also enable the -s flag on the last line of run.sh to display the graphs as they are created.

Excellent job! Your results were extremely easy to reproduce and we felt that the heatmap was a very convincing presentation choice in comparison to the paper you did your project on. We thought that the effort your team put into differentiating your project from the paper was fair and well noted. We found it interesting and are rather curious as to how closely your estimations matched up to the empirical distributions from the data center. (5/5)