Team: Gregory Hill, Chinmayee Shah

Introduction

The original and prevailing way internet switches buffer packets until their internal memory is full and then ignore packets that arrive once the memory is full (hence dropping the tail of the buffer) is referred to as drop-tail queuing. This approach, while simple, can perform poorly in practice. More recently, due to cheap memory and a desire to maximize link utilization, there has been a widespread use of large buffers that can cause a single switch to delay packets for an excessive amount of time (without being dropped, which is the primary indicator of congestion for TCP). This in turn adversely affects latency sensitive applications such as games, voice-over-IP, and short flows. This problem of excessive queueing delay due to consistently large buffering is referred to as bufferbloat, and arises at switches with bottleneck outlinks.

One way of attacking the problem of bufferbloat is to use active queue management techniques, which can mark or drop packets before the switch buffer is completely full. A famous early algorithm for AQM, RED, ended up being hard to configure properly and did not work well in certain situations such as bursty flows. CoDel is an AQM algorithm introduced in 2012 by Kathleen Nichols and Van Jacobson, that works well for many different flows. A primary goal of CoDel is to work well in many scenarios without the need to set parameters. CoDel focuses on the minimum queue delay packets experience within a given interval. If the time in the queue for packets stays above a target for a period of time, packets are dropped with increasing frequency until the queuing delay goes below the target again. Nichols and Jacobson analyzed CoDel in a 2012 article in ACM queue showed the median packet delay and link utilization were both significantly better in CoDel than drop tail queuing or RED for varying bandwidths.

Commendably, the code and scripts used in the ACM queue paper is available to reproduce the results of the paper directly at pollere-codel. However, we had a desire to evaluate the CoDel algorithm itself more independently. To do this, we used the mahimahi network emulation suite, recently accepted into Debian GNU/Linux. Our results show similar performance to the performance numbers in the original paper, even on a different platform and using a stock Linux distribution (Ubuntu 14.04) networking stack and different FTP using twistd for our FTP server and wget as the client. We show our reproduced results, highlight the places where CoDel could perform better, and additionally show the results of running CoDel with a much more unstable 4g cellular trace.

The CoDel Approach in Detail

CoDel is an active queue management technique that aims to reduce the problem of bufferbloat by monitoring the amount of time that packets stay in the queue and statistically dropping some packets when they stay in the queue for excessive amounts of time. In practice, traffic is often bursty, and the queue management technique should accommodate bursts, that is, allow packets to stay for a long time sometimes, during bursts. Dropping packets when there is a burst or when new connections are setting up defeats the purpose of a queue. To address this issue, CoDel checks if the queueing delay, or sojourn time has been above target for some interval of time, before starting to drop packets. When CoDel drops a packet, it enters a dropping cycle, and starts dropping packets at increasing frequency, by reducing the time to drop next packet inversely to square root of number of packets dropped, till the sojourn time goes below target. When the queueing delay goes below the time to drop packet, CoDel exits the dropping cycle. CoDel re-enters the dropping cycle when the sojourn time exceeds target for interval amount of time, and uses the previous dropping frequency to determine the new dropping frequency.

CoDel requires setting only target and interval, and works well not only for short flows but also for long TCP flows and bursty traffic. In contrast, other AQM algorithms like RED work only for short flows, or are hard to configure properly. CoDel works for any link rate, does not require tuning any knobs (except for interval time and target time), and does not need specific information such as queue size, average size, link rate. The interval is related to RTT, and the experiment uses 100ms, which seems to work well across a range of RTTs from 10 ms to 1 seconds, with good performance in the range from 10 to 300 ms. The target is related to acceptable queueing delay. The paper claims that below a target of 5 ms, utilization suffers for some conditions and traffic loads, and above 5 ms there is very little or no improvement in utilization.

Because CoDel uses only sojourn time, work needs to be done only when packets are enqueued and dequeued – time stamping, computing sojourn time when packet is dequeued, and determining whether to deliver the dequeued packet, or drop it and if to enter or exit a dropping cycle. This makes it possible to have a simple and efficient implementation without any need for synchronization primitives like locking.

CoDel Results

The original CoDel paper uses the ns-2 network simulator for its evaluation. The paper presents median delays and link utilization for different RTTs and link bandwidths. The experiments use FTPs (long flows) with and without background web traffic and constant bit-rate applications. The web traffic consists of TCP traffic generated by many client server connections, and is simulated by using PackMime to set up connections at a specified rate. At low bandwidths, CoDel and RED have similar median delays and utilization. At higher bandwidth, CoDel has greater link utilization, indicating that RED is very aggressive in controlling delays and dropping packets. CoDel consistently achieves link utilization of around 0.9 or more.

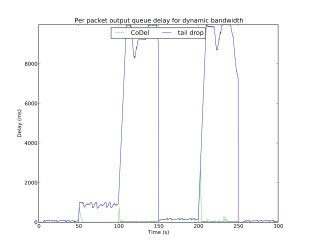

Another evaluation shows CoDel’s performance when link bandwidth varies dynamically. This experiment simulates a wireless setup, with link bandwidth increasing or decreasing every 50 seconds. A drop in link bandwidth causes the queue at bottleneck switch to build up, but CoDel is quick in dropping packets and adjusting, so that the delay stays small. CoDel’s delay is consistently less than simple tail drop or RED. CoDel has better throughput than RED, and similar to taildrop. Cumulative bytes vs time for CoDel however trails behind taildrop slightly (and by a constant amount in each segment), because taildrop fills up queues at the expense of delay. This experiment uses four FTPs, and five web connections per second.

The paper also presents delay vs RTT and fairness computed using Jain’s fairness index, for CoDel and RED. CoDel is consistently better on the fairness index, because of the randomness involved in dropping packets.

CoDel Under Dynamic Bandwidth and for 4G Traces

The code and scripts used in the ACM queue paper is available to reproduce the results of the paper directly at pollere-codel. However, we had a desire to evaluate the CoDel algorithm itself more independently, using the Mahimahi network emulator.

We chose to reproduce the results of the experiment that varies bandwidth every 50 seconds, because we wanted to study and verify CoDel’s performance, the time it takes to adjust and the impact of dropping packets on delay and throughput for realistic situations. We felt that varying the bandwidth every 50 seconds was too slow for actual WiFi setups where CoDel would be used, and hence, we also ran the same experiment with 4G traces. While 4G traces reflect the nature of cellular networks, and may have more variation due to multipath fading and moving obstacles outdoors, we consider it a stress test for CoDel.

Implementing CoDel in Mahimahi

We were inspired by the idea that an algorithm like CoDel was simple enough to be implemented in another environment to give us more confidence in the results of the paper. To do this we used a new network emulation tool called mahimahi, to recreate the experimental setup in the paper. Mahimahi uses containers to create composable shells that can add a fixed rtt or emulate a lossy or time varying bandwidth link with different queuing disciplines. Anything that can be run in your terminal window can also be run in a mahimahi container, which allows new network scenarios to be created with ease. To evaluate codel, we had to implement it as a queuing type for a mahimahi link shell. Our implementation of CoDel was based on the pseudo code in an Internet draft from April 2015 (here). The pseudo code in this draft is very similar to that in the appendix of the original CoDel paper. The code is available on the codel branch of mahimahi on github.com/greghill (here). Our code used 100ms interval and 5ms target, as described in the original ACM queue paper and specified in the Internet draft that describes CoDel.

Experiment Setup

The paper describes the number of FTP connections and rate of web connections per second, and the way bandwidth at bottleneck link is varied. The wireless link has a nominal bandwidth of 100Mbps, which is subject to variation due to degradation. The bandwidth is 100Mbps during first 50 seconds, 10Mbps for next 50, and 1Mbps, 50Mbps, 1Mbps and 100Mbps for the following 50 second segments, in that order. There are 4 concurrent FTP connections (to simulate long flows) and 5 web connections (response, request) per second.

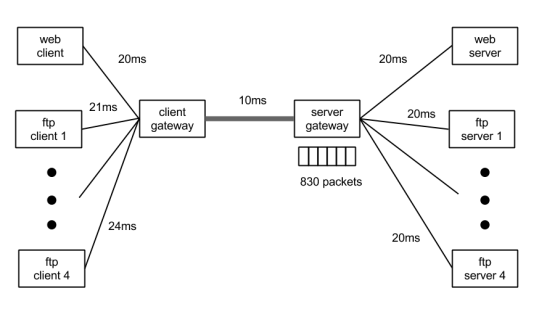

From the description, the exact topology between servers and clients, used for the experiment, was not clear to us. On a closer inspection of the Tcl code here (used for the original experiment), we realized that the experiment uses a server gateway, a client gateway, and a bottleneck link between the two gateways. There is a single web server, a single web client, and a different FTP server and client for each FTP connection. The figure below illustrates this setup. The thick gray line between the gateways is the bottleneck link. The FTP flows and responses from web servers flow towards the client, that is, right to left. The experiment uses a nominal delay of 10 ms between the two gateways, and approximately 20ms between clients and client gateway, and servers and server gateway. This means a nominal RTT of 100ms, which gives a bandwidth-RTT (mentioned as bandwidth-delay in paper) product of about 830 packets for a 100Mbps link (subject to degradation) and 1500 byte packets. The switch in the link has a buffer to accommodate 830 packets for droptail.

The tcl code uses slightly varying delays for ftp clients (20 + client number). These delays do not seem to vary enough to simulate a realistic variation. We believe this variation in delay is to avoid synchronization of flows in ns2.

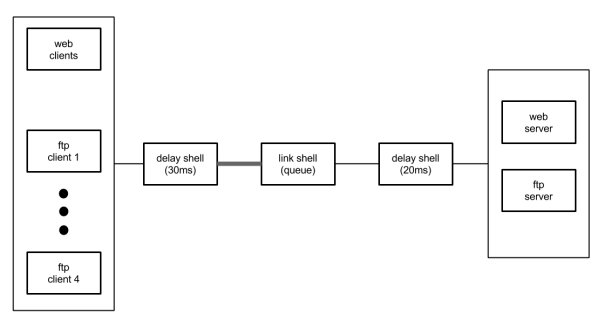

It is a little tricky to reproduce the same topology in Mahimahi. In Mahimahi, we create delay shells to emulate delay, and link shells to emulate queueing policies. After discussions with Prof. Keith Winstein, it seemed that the best way to reproduce the experiment in Mahimahi was to use a tree topology, where a delay and link shell can be shared by multiple endpoints. Accordingly, we created the following topology in Mahimahi:

We create delay and link shells using mm-link and mm-delay in Mahimahi. We launched an ftp server and a web server outside the Mahimahi shells. We created 4 concurrent FTP requests using 4 processes. We create a web request every 0.2 seconds, to reproduce the experiment as closely as possible. Also, it was not clear to us, the distribution of request response sizes used by PackMime. So we created constant request responses, where the request is for a web page that is around 800kB (wall street journal homepage). The thick gray line represents the bottleneck link for the link shell.

We tested the link shell in Mahimahi with two different queues – CoDel and droptail, for two different traces. To reproduce the experiment, we made a trace that has constant bandwidth for 50 second segments – 100Mbps, 10Mbps, 1Mbps, 50Mbps, 1Mbps, 100Mbps. The bandwidth in the other direction was a constant 12 Mbps the entire time. We also ran an experiment with a Verizon LTE uplink and downlink trace from the mahimahi package.

Results

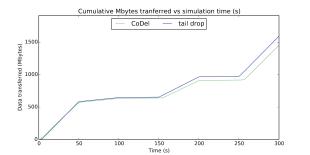

Our reproduction of Figure 7 in the ACM queue paper look very similar to the original. The figure below is taken from the paper, and shows delay and cumulative bytes transferred, vs simulation time. We implemented CoDel in Mahimahi, and compared it to tail drop (interchangeably used with the term droptail in this section).

Paper

The two figures above show the delay and cumulative bytes transferred for a our experimental setup in Mahimahi. Results from our experiments are very close to the results from the paper, even with a slightly different setup. Tail drop introduces extremely large delays when the bottleneck link bandwidth drops because the queue builds up until packet losses or timeouts occur at the endpoints. CoDel delays on the other hand do not spike too high and, when they do, are quickly brought down.

CoDel’s reduced throughput

The downside of CoDel is that throughput is reduced. The paper downplays this, saying that “long delays are not required for high utilization” and that “CoDel transfers almost the same total kilobytes as Tail Drop, with the differences coming at the rate jumps.” While this is partially true, the graph of cumulative bytes transferred seems to have been chosen because it makes this reduction in throughput look very minimal: the lines track each other closely. However, cumulative bytes transferred is somewhat deceptive. Measuring the pixels on chart 7c, “almost the same total kilobytes” was actually ~10% less bytes transferred. Given the 100x reduction in packet delays at times this is a very reasonable tradeoff, but it is a tradeoff nonetheless.

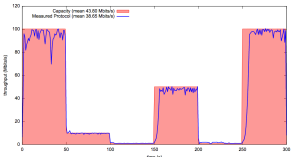

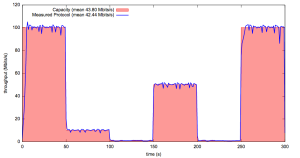

This reduction is fairly significant when you consider the theoretical maximum that could be transferred in that time period. While tail drop sends 97% of the maximum possible bytes it could during the trace, CoDel only sends 88% of the maximum possible bytes. Below we graphed the throughput going into and out of the switch using mahimahi’s plotting scripts (why the graphs look much nicer). When the ingress throughput exceeds the egress throughput, the queuing delay within the switch increases. Here we get a better idea of what CoDel is able to achieve, as the ingress almost never exceeds the capacity of the link, thus the queuing delay is minimal. CoDel brings the tcp sawtooth of traffic ingress almost entirely below the capacity of the link. On the other hand, the tail drop switch ingress traffic sawtooths above and below the capacity of the bottleneck link, leading to much higher delays but maximizing the capacity of the link. So the paper is correct that a significant source of throughput loss is at the transition points, but at least in our traces there is also a clearly lower utilization with CoDel even at a constant bandwidth. Some bitrate loss seems inevitable without changing the sending characteristics of TCP, as to maximize the link bandwidth with a sawtooth sending curve, the link must be oversubscribed and queued at the high times so that the queue won’t drain at the bottom of the sawtooth.

CoDel switch ingress and egress throughput

Tail drop switch ingress and egress throughput

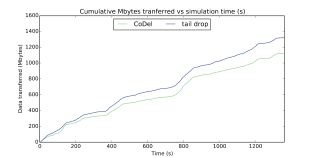

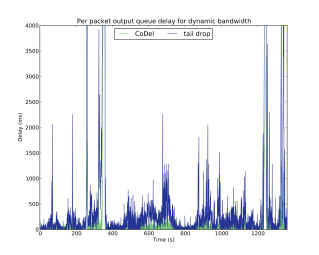

4G

When running the same benchmark on the Verizon 4G trace, we see how traditional TCP really struggles with highly variable connections. CoDel’s delay stayed below the tail drop delay, but seems to spike, and the delay at any time is more for taildrop than CoDel. The cumulative bytes for droptail is around 12% more that for CoDel, at the end of the experiment, which is a little more than the WiFi experiment from the paper (which was 9.6%). Cellular networks are probably a bad fit for CoDel because of the very different propagation characteristics and huge variation with time. We did not have WiFi traces to test CoDel for realistic situations, and hence, we stress tested CoDel with the 4G traces.

Experiences with Mahimahi

The mahimahi emulator was very convenient to use. The composable shell abstraction allowed us to recreate a complex topology by simply running a command prefixed by others. However, Mahimahi is limited in its ability to easily simulate some topologies: A tree of mahimahi shells can talk to levels below them, but communicating to a process in a shell requires forwarding entries to manually be set. As a result, we were not able to create the exact topology from the experiment in the original paper in Mahimahi, that is, share the link shells with multiple shells on two sides, but we did not find that to be a problem in creating a conceptually similar topology.

Discussion

Recreating the experiments of the paper on a different platform and using a network emulator vs a simulator, we still were able to replicate results from the paper. This is quite impressive to us, and gives us more confidence that the paper as a whole was technically sound and the results were not significantly influenced by their choice of platform. We felt the choice of cumulative bytes downplayed the throughput losses caused by CoDel, we showed these more clearly by graphing the ingress and egress throughput to the switches in the experiment. Overall though, CoDel seems like a worthwhile tradeoff, sacrificing some overall throughput for drastically reduced latency without needing to modify the networking stack at the endpoints.

CoDel seems to be extremely easy to tune, with just 2 parameters, interval, which is closely related to RTT, and target, which reflects the acceptable delay. We did not have enough time to study variation in CoDel’s performance with different interval values and RTTs, but that would be an interesting direction to explore if we had more time.

CoDel performs better than tail drop but has some trouble on the 4G trace we ran. While a 4G trace represents a very different network model, it would be interesting to see how CoDel performs in more realistic network conditions, as a standard wifi connection bandwidth is probably far from a step function with 50 seconds of constant bandwidth at each step. As can be seen in the throughput graph, CoDel is slower than droptail at adapting to changes.

Instructions on Replicating the Experiment

We have created a custom AMI that has Mahimahi and all dependencies installed. There are 2 scripts, one to run the setup from paper, and one to run 4G traces. These are called start-paper-experiment.sh and start-4g-experiment.sh respectively. These scripts will create the servers, build the topology, launch clients, cleanup, and plot results.

Alternatively, you can clone the github repostirory github.com/greghill/mahimahi, and check out the codel branch. The code is here: https://github.com/greghill/mahimahi/tree/codel. The install script in scripts directory, install.sh will install all dependencies and make the installation. The script should not be run as root, but the script will ask for root privileges when required.

We encountered some issues with cleaning up sometimes, which we could not fix — we simply pkill wget, twistd, and python to kill all the web and FTP connections and servers after the experiment.

NOTE: Since we did not want to edit the README file in Mahimahi project root directory for CoDel, we have added a README file in scripts directory, under the project root directory.

Using EC2 AMI

- The AMI is cs244-spring15-codel. Launch this AMI on c3.2x instance. Most results can be reproduced with a smaller instance too, but we wanted to ensure that we have enough compute power for the entire experiment, and hence use a larger instance.

- Change directory to mahimahi, and pull to make sure that all updates are available on the AMI. There may have been small updates to compile the results and plots, but you do not need to reinstall anything.

git pull origin codel - Change directory to scripts under mahimahi and run the experiment scripts. You will be prompted for root password.

– cd scripts

– ./start-paper-experiment.sh (note you do not need to sudo, the script will ask for sudo privilege when necessary)

– ./start-4g-experiment.sh - Each experiment script will save plots to the scripts directory, and will also open the plots for you to see. The plots are saved as delay*.pdf and mbytes*.pdf. Note that we plot Mbytes and not Kbytes.

NOTE: In order for the plotting script to work, you need to ssh with -X option.

Build from a scratch Linux image

Mahimahi requires Ubuntu > 13.10 (http://mahimahi.mit.edu/). We ran our experiments over Ubuntu 14.04.

- Clone the repository mahimahi and check out codel branch:

git clone https://github.com/greghill/mahimahi

cd mahimahi

git checkout -b codel

git pull origin codel - Change directory to scripts under project root directory and run install. This will install dependencies and make install. You will be prompted for root password.

cd scripts

./install.sh

(NOTE that you do not need to sudo, the script will ask for sudo privilege when necessary) - Run the experiment scripts in the scripts directory. You will be prompted for root password.

./start-paper-experiment.sh

./start-4g-experiment.sh

(NOTE that you do not need to sudo, the script will ask for sudo privilege when necessary) - Each experiment script will save plots to the scripts directory, and will also open the plots for you to see. The plots are saved as delay*.pdf and mbytes*.pdf. Note that we plot Mbytes and not Kbytes.

NOTE: In order for the plotting script to work, you need to ssh with -X option, if running remotely.

References

- Nichols, Kathleen, Van Jacobson, Rick Ratzel, Rodney Greenstreet, Thomas A. Limoncelli, Dennis Abts, Bob Felderman, and Luigi Rizzo. “Controlling Queue Delay.” http://queue.acm.org/detail.cfm?id=2209336

- Mahimahi network emulator http://mahimahi.mit.edu/

- Active queue management and packet scheduling Internet-Draft https://tools.ietf.org/pdf/draft-ietf-aqm-codel-01.pdf

- Tcl code for original CoDel experiments https://github.com/dtaht/ns2

- CoDel Wiki http://www.bufferbloat.net/projects/codel/wiki/Wiki/

- Pollere Inc http://pollere.net/

Nice paper. One other solution to the delay problem you should note is the use of delay based TCP congestion control.

It’s not possible to get delays as low as Codel tries to when using standard TCP congestion control and RTTs are high without some loss in throughput. If you increase ‘target’ you can reduce this problem.

This is interesting work and I’ll have to check out mahimahi. But I do want to caution enshrining the original CoDel article too much: that was rushed out under pressure from Jim Gettys (who was right, of course) and, at the point of publication, had only the input of the two co-authors. We see that as the first stake in the ground for on-going work with lots of contributions from others. I also want to caution not to worry overmuch about duplicating weird simulator conditions. My intent was to exercise CoDel as much as possible, not to claim the simulator is the real world.

I think the issue of throughput is a complicated one. If the “throughput” for bulk data flows is reduced a small amount but enable interactive applications to actually work, this may not matter. And, the work of fqcodel with multiple queues improves performance. Using a small number of different queues can cure ack problems and also put bulk flows together. This does delay bulk flows of course but overall performance is improved. (But just because a few queues is good does not mean that more queues must be better!) Determining what constitutes good performance is not an easy task.

CS 244 Peer Eval Score: 5/5

We were able to reproduce the same plots as the blog post and the custom AMI provided made setup very convenient.

Also, we tried the fresh-install method. There was this error when running ./install.sh:

make[2]: Entering directory `/home/ubuntu/git/mahimahi/scripts’

make[2]: *** No rule to make target `mm-throughput-graph’, needed by `all-am’. Stop.

We fixed it by checking out the old files:

$ git checkout 74c7ad4 — mm-throughput-graph mm-delay-graph

And everything was good as gold. An easy fix, doesn’t change our score. 🙂

Thanks! We moved some plotting scripts to a separate directory, which seemed to have broken the installation and went unnoticed. Your fix is perfectly good (though the old script will not be invoked).