by Andrew Lim and Ryan Hermstein

Introduction

The rise of smartphones and tablet devices to ubiquity in recent years has spurred rapid growth of cellular network traffic. Given the distinct properties of cellular links, they deserve their own sorts of techniques for managing network capacity and resources. In particular, congestion control mechanisms must be rethought to fit cellular networks. Traditional TCP variants do not perform well on rapidly-changing cellular channels [3], failing to take advantage of all available capacity and suffering high end-to-end delays.

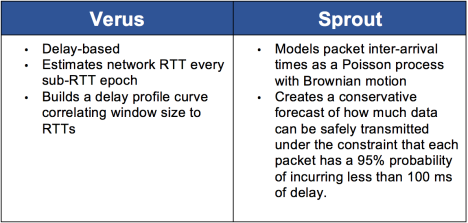

The paper we consider, Adaptive Congestion Control for Unpredictable Cellular Networks, describes Verus, an end-to-end congestion control protocol that attempts to adapt to rapidly changing cellular network channels. Many of the experiments in this paper compare Verus to Sprout, which is another recent congestion control protocol specifically designed for cellular networks. A primary difference between the two algorithms is how they choose to model the characteristics of the cellular channel. We summarize the key elements of the two in the following table:

Table 1: Verus vs. Sprout Algorithms

The differences in these algorithms cause them to achieve different performance metrics. In real-world experiments, Verus achieves up to 30% higher throughput as compared to Sprout. Zaki, et al. do not perform a trace-based comparison of Sprout and Verus since Sprout is not compatible with the OPNET simulator that they use. These real-world results would be tough to reproduce due to differences in cellular infrastructure/observed network load; the original Verus experiments were run over cellular networks in the United Arab Emirates.

Instead, we have chosen to focus on an additional throughput-delay comparison between Sprout and Verus from the paper. The experiment is used to compare how the two algorithms adapt to rapidly changing network conditions; over a varying link with a maximum capacity of 20 Mbps, throughput, loss rate, and delay are changed every five seconds. Verus achieves higher average throughput than Sprout but also incurs much higher delay.

Figure 1: The original figure from the varying link experiment comparing Verus and Sprout.

The subset of results that we choose to reproduce are those in Figure 1. In the original paper, Verus is also compared to common TCP variants such as Cubic and Vegas. We choose to focus exclusively on the comparison with Sprout since the it is the only other algorithm included in the comparison that is designed with cellular networks in mind. This experiment is a true test of how adaptive these congestion control algorithms are because the link varies every five seconds. Furthermore, it is possible to replicate this experiment in Mahimahi, a network emulation tool. This also fills in a gap in the original paper because the authors were unable to run trace-driven comparisons between Verus and Sprout.

Our Results

In order to replicate the Verus variable network experiment, we generated a synthetic trace where the link capacity changes every five seconds varying between 2 Mbps and 20 Mbps. From our experiments on this trace, we find that Verus does achieve higher throughput than Sprout (Figure 2); Verus has an average throughput on the synthetic trace of 11.38 Mbps whereas Sprout has an average throughput of 9.38 Mbps. Verus, however, suffers much greater signal delay than Sprout (Figure 3); Verus has a 95th percentile signal delay of 744 ms, whereas Sprout has a 95th percentile signal delay of 40 ms. The results we see for Sprout are similar to those presented in the original Verus comparison, but the Verus results we observe have some differences. In terms of throughput, we observe that Verus is often over-aggressive and transmits at a rate greater than the available capacity of the link. When Verus floods the link in these scenarios, the delay is significantly increased due to dropped packets at the queue.

Figure 2: Throughput time series of Sprout and Verus over varying link trace.

Figure 3: Delay time series of Sprout and Verus over varying link trace.

We notice that in our experiment and the original Verus experiment, Verus builds up a large queue almost immediately, which leads to very high delays. In our experiment, however, Verus does not recover from this high delay. We believe that this is a result of poor parameter tuning. In particular, we find that the default Verus slow start timeout is 500 ms. This seems particularly high, given the benchmark sub-100 ms latency required for interactivity. Another, parameter that we believe needs further analysis is the epoch duration. The default Verus implementation uses a 5 ms epoch. We believe, however, that this epoch is too short and results in too frequent adjustments in the network RTT estimate, which results in susceptibility to noise and other transient events. To investigate whether a better throughput-delay tradeoff could be accomplished with Verus, we ran Verus with modified parameters of 200 ms for the slow start timeout and an epoch duration of 50 ms. With these parameters, we see that Verus still achieves better throughput than Sprout (Figure 4) and delay is much lower than Verus with the default parameters; Verus with these modified parameters has a 95th percentile signal delay of 247 ms as compared to the 744 ms of default Verus. These results appear to be much closer to those presented in the original Verus paper, although the Verus delay we observe is still higher.

Figure 4: Throughput time series of Sprout and Verus with modified parameters over varying link trace.

Figure 5: Delay time series of Sprout and Verus with modified parameters over varying link trace.

Challenges

- Integrating with Mahimahi – The source code for both Sprout and Verus is publicly available with clear instructions indicating which dependencies to install and how to configure and build sender/receiver executables. Integrating Sprout with Mahimahi is fairly trivial, but integrating Verus presented some complications. Verus uses separate sockets for its initial handshake/connection establishment and for its actual data transfer. After some experimentation/logging, we realized that these two sockets ended up communicating using the same IP address/port pairing. We are not exactly sure why the Verus code is structured in this manner, but we modified the client and server to use the same socket for the handshake and data transfer. After making this modification, we were able to run Verus with Mahimahi.

- Variable Network Trace – The experiment that we have attempted to reproduce varies network conditions (throughput, loss rate, delay) every 5 seconds. The Verus paper, however, does not describe in detail the policy used to change their network conditions; the network changes appear to be random within given boundary thresholds for the different parameters. For our version of the experiment, we had to create our own trace file that is compatible with Mahimahi. To do so, we had to understand the format of Mahimahi trace files; Mahimahi trace files allow you to specify when packets can be sent out (allowing you to vary throughput over time). After we understood how these traces are structured, we generated our own trace by writing a Python script that chooses a random throughput from 2 to 20 Mbps every 5 seconds, just like the Verus experiment. Unfortunately, Mahimahi does not allow you to dynamically vary loss rates and delays while running a trace. Mahimahi has delay and loss shells, which are containers that allow you to specify a fixed delay and loss rate, respectively. These values are fixed, however, at the time the shell is instantiated.

- Queueing Policy – The original Verus experiments run over traces incorporated a router with a queueing policy known as Random Early Detection (RED). Mahimahi only comes with infinite, drophead, and droptail queueing built in. In order to recreate the setup from the Verus paper, we had to modify Mahimahi so that a link shell could support RED. We were able to successfully augment Mahimahi to use RED with the given parameters from the Verus paper.

- Results Graph – Mahimahi is able to produce a throughput graph for a single log file. However, we needed to visualize the throughput of Sprout and Verus on the same plot. Working from the original throughput graphing script, we extended it so that it could take two log files and graph throughput for Sprout and Verus on the same plot.

Extensions

The Verus paper made a bold claim in its introduction: “In comparison to Sprout, Verus achieves up to 30% higher throughput in rapidly changing cellular networks.” We wanted to see if this was true in a modern real world example, especially since cellular networks nowadays can support higher throughput, which is an advantage to Verus over Sprout (since Sprout focuses on minimizing delay). To test this, we used a network trace that Keith collected while driving on the nearby Alma Street. As with the variable network trace, we see similar trends. Verus tends to exploit available capacity much better than Sprout, but also incurs significantly greater delay.

Figure 6: Throughput and delay time series for Sprout and Verus on Alma St. trace.

Critique

Based on our experiments, we find that Verus does achieve higher throughput than Sprout, but the additional incurred delays significantly outweigh the gain in additional throughput. The original paper’s implication that Verus achieves a different outcome on a similar throughput-delay frontier as Sprout does not appear to hold in our setup; rather, Verus appears to lie on a much less favorable throughput-delay frontier. Although the ideas of the Verus algorithm, in particular, its interpolated delay profile, seem promising, we feel that more attention needs to be given to a stability analysis of Verus’s parameters. Empirically, the authors settled upon a 5 ms epoch duration stating that this value allows Verus to quickly react to sudden short-term fluctuations. Perhaps, however, these short-term fluctuations are only transient or artifacts of interference in the channel, resulting in poor send decisions. The paper also does not address Verus’s slow start parameter tuning. We observe poor performance at the start of a Verus flow that cannot be recovered from. Future work should provide deeper analysis into Verus’s stability and address how Verus can be tuned for different cellular link conditions.

Platform

In the Verus paper, the trace-based experiments are run using the OPNET simulator. We chose to use Mahimahi, however, as our emulation platform primarily for practical reasons. First, OPNET is proprietary software that must be purchased. Second, Mahimahi has a much lower setup cost and is much easier to use. Using Mahimahi, it was relatively easy to run the desired trace-based experiments and produce the throughput/delay graphs. Third, we knew ahead of time that Sprout would be simple to integrate with Mahimahi, unlike with OPNET.

The entire setup is simple to reproduce, and the results of the setup are consistent from run to run, since we use the same fixed traces. We do note, however, that there are some considerations that may affect the reproducibility of the results including: machine performance, non-determinism in congestion control algorithms due to timing related decisions, and non-determinism in packet drop from RED which uses a random probabilistic policy. We assume machine performance is constant as we specify the AMI image/instance type. The variability from the non-determinism in the congestion control algorithms/RED queuing, however, appears to be minimal for the most part. Occasionally, however, Verus will flood the network with packets resulting in larger spikes than normal in throughput. We attribute these spikes to timeout related elements of the Verus slow start and loss recovery mechanisms.

Running our Experiment

Our code is hosted at: https://github.com/alim907/cs244-pa3

Instructions for running the experiments and collecting output, as well as documentation of the code structure can be found in the repository README.

References

- Zaki, Yasir, et al. “Adaptive Congestion Control for Unpredictable Cellular Networks.” Proceedings of the 2015 ACM Conference on Special Interest Group on Data Communication. ACM, 2015.

- Winstein, Keith, Anirudh Sivaraman, and Hari Balakrishnan. “Stochastic forecasts achieve high throughput and low delay over cellular networks.”Presented as part of the 10th USENIX Symposium on Networked Systems Design and Implementation (NSDI 13). 2013.

- Lu, Feng, et al. “CQIC: Revisiting Cross-Layer Congestion Control for Cellular Networks.” Proceedings of the 16th International Workshop on Mobile Computing Systems and Applications. ACM, 2015.

- Zou, Xuan Kelvin, et al. “Can accurate predictions improve video streaming in cellular networks?.” Proceedings of the 16th International Workshop on Mobile Computing Systems and Applications. ACM, 2015.

- Erman, Jeffrey, et al. “Over the top video: the gorilla in cellular networks.”Proceedings of the 2011 ACM SIGCOMM conference on Internet measurement conference. ACM, 2011.

- Dahlman, Erik, et al. 3G evolution: HSPA and LTE for mobile broadband. Academic press, 2010.

- Floyd, Sally, and Van Jacobson. “Random early detection gateways for congestion avoidance.” Networking, IEEE/ACM Transactions on 1.4 (1993): 397-413.

Peer evaluation: 5/5

The AMI instance provided was very easy to spin up, as described in this group’s README. Their code ran with a single command and for the expected amount of time. The resulting graphs were produced as HTML files (very helpful for viewing) in the expected directory, and overall matched those in the blog post very well. The group also provided cleanup scripts in order to start the experiment fresh if needed. Looks good, nicely done!