Team: Azar Fazel and Mark Gross

1. Goals:

In datacenters, it is hard to guarantee good performance for flows of different sizes, especially for latency-sensitive short flows that are mixed in with lower-priority long flows. The “Deconstructing Datacenter Packet Transport” paper presents pFabric, a minimalistic datacenter fabric design that provides near-optimal completion times for high-priority flows along with high overall network utilization. In the pFabric design, switches greedily decide which packets to schedule and which to drop according to priorities carried in the packet header, and they do not maintain any flow state or rate estimates.
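To make the switch behavior described above concrete, here is a minimal sketch of a greedy priority buffer. It is an illustration under our own assumptions, not the paper's or our ns2 implementation: the names `Packet` and `PFabricQueue` are hypothetical, priority is taken to be the remaining flow size (smaller means more urgent), and the sketch ignores packet ordering within a flow.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    flow_id: int
    priority: int  # assumed: remaining flow size; smaller value = more urgent


class PFabricQueue:
    """Tiny per-port buffer that keeps no per-flow state (illustrative only)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.buffer: List[Packet] = []

    def enqueue(self, pkt: Packet) -> Optional[Packet]:
        """Add pkt; return the packet dropped on overflow, or None."""
        self.buffer.append(pkt)
        if len(self.buffer) <= self.capacity:
            return None
        # Overflow: drop the least urgent packet (largest priority value).
        worst = max(range(len(self.buffer)), key=lambda i: self.buffer[i].priority)
        return self.buffer.pop(worst)

    def dequeue(self) -> Optional[Packet]:
        """Transmit the most urgent packet (smallest priority value)."""
        if not self.buffer:
            return None
        best = min(range(len(self.buffer)), key=lambda i: self.buffer[i].priority)
        return self.buffer.pop(best)
```

With a two-packet buffer, enqueuing packets with priorities 1000, 50 and 500 drops the 1000 packet, and the 50 packet is transmitted first. The linear scans are acceptable here only because the buffer is assumed to be very small.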

2. Motivation:

Datacenter network fabrics are required to provide both high fabric utilization and effective flow prioritization. Specifically, datacenters support large-scale web applications that are generally architected to generate a large number of short flows to exploit parallelism, and the overall response time depends on the latency of each of those short flows. Hence, providing near-fabric latency to short latency-sensitive flows while maintaining high fabric utilization is an important requirement in datacenters.

3. Results:

In designing the pFabric switch, the authors showed that they could decouple flow prioritization from rate control without sacrificing utilization.

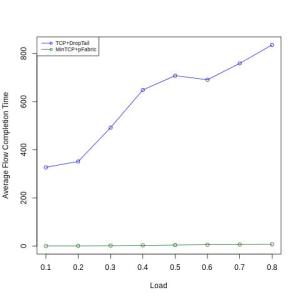

The authors evaluated pFabric for different workloads and compared its performance with several other schemes: TCP+DropTail, DCTCP, minTCP+pFabric and LineRate+pFabric. Figure 1 shows the results for these experiments.

Fig. 1: Results reported by pFabric paper

As seen in the figure above, for both workloads, minTCP+pFabric and LineRate+pFabric achieve near-ideal average flow completion time (FCT) and significantly outperform TCP and DCTCP.

4. Subset Goals:

For the purpose of this project, we are interested in reproducing the results that the authors got for two different flow size distributions: one from a cluster running large data mining jobs and the other from a datacenter supporting web search. We compare the performance of an implementation that uses both pFabric and minTCP with that of TCP.

5. Subset Motivation:

Since TCP is the standard protocol for packet transmission, we are interested in observing how it performs for latency-sensitive short flows when there is no flow prioritization, compared with pFabric, which gives small flows high priority. We want to observe the performance of a network that has eschewed the need for some form of congestion control by using the priority queues specified by pFabric.

6. Results

The graphs above are our reproductions of figure 2, which shows the average flow completion time as a function of load. In our experiments, we only seek to replicate the average flow completion times of TCP and minTCP + pFabric. Our experiments were run over a 3-layer fat-tree with four pods and a total of 16 hosts. All links were 1 Gbps with an added delay of 2 us per link, and packets were 1500 B. This was a scale-down from the setup described in the paper, due to memory issues we encountered early in setting up our topology. Per our discussion with Mohammad Alizadeh, we normalized our results based on the best possible completion time for each flow size.

We ran 16 simulations, 8 for each type of workload (web search and data mining), with each of those 8 representing a different percentage of total network capacity. Our minTCP + pFabric priority queues were set to hold 22.5 KB (15 packets). Our TCP DropTail queues were set to hold 225 KB (150 packets). We generated both normalized and unnormalized values for comparison, but found that the unnormalized values were unhelpful in our analysis, though we note that they tend to favor minTCP+pFabric in average flow completion time (the simulation generates both normalized and unnormalized plots if one wishes to compare them).

In all simulations, we generated 10k flows with start times drawn from a Poisson arrival process, following the paper’s scheme as closely as possible. For our minTCP + pFabric implementation, we had an unlimited number of priorities based on remaining flow size, which in theory should benefit workloads containing a large number of small flows. Both of our workloads have this shape: a disproportionately large number of small flows.
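As a rough illustration of how Poisson flow start times can be generated for a target load, consider the sketch below. The function name, the load model (arrival rate = load × link capacity / mean flow size) and the example numbers are our assumptions for illustration; they are not taken from the paper or from our ns2 scripts.

```python
import random
from typing import List


def poisson_start_times(n_flows: int, load: float, link_bps: float,
                        mean_flow_bytes: float, seed: int = 0) -> List[float]:
    """Return n_flows start times (seconds) from a Poisson arrival process."""
    # Assumed load model: flows per second = load * capacity / mean flow size.
    rate = load * link_bps / (mean_flow_bytes * 8.0)
    rng = random.Random(seed)
    t = 0.0
    times = []
    for _ in range(n_flows):
        t += rng.expovariate(rate)  # exponential gaps give Poisson arrivals
        times.append(t)
    return times


# Hypothetical example: 10k flows at 50% load on a 1 Gbps link,
# assuming a mean flow size of 100 kB.
starts = poisson_start_times(10_000, 0.5, 1e9, 100_000)
```

Raising `load` shortens the mean gap between flows, which is how a sweep over different percentages of network capacity could be produced from the same flow size distribution.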

For our websearch distribution, we ran these simulations with a CDF of flow sizes ranging from 1 to 20000, with a large percentage of all flows tending towards the lower end of this range (see the CDF folder in cs244-pa3 for the actual distributions). We found that the general shape of our plots matched the results described in the paper, mainly due to the normalization. The normalized results show our minTCP + pFabric to be very close to ideal in comparison to TCP+DropTail.

Our normalized range differs fairly drastically from the paper’s: their normalized flow completion times fall between 0 and 10, while ours fall between 0 and 175. This might be because our normalization scheme and scaling ended up being different from the original setup. In addition, we did some tinkering with the RTO value set in minTCP that might have affected our values.
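To show what normalizing by "the best possible completion time for each flow size" can look like, here is one plausible scheme. It is only an assumption for illustration: the ideal time is taken to be one RTT plus the serialization time at line rate, which may differ from both the paper's scheme and the one used in our scripts. The function names and default values (1 Gbps, 12 us RTT) are ours.

```python
from typing import List, Tuple


def ideal_fct(size_bytes: int, link_bps: float = 1e9, rtt_s: float = 12e-6) -> float:
    """Assumed best case: one RTT plus transmission time at full line rate."""
    return rtt_s + size_bytes * 8.0 / link_bps


def normalized_fct(measured_s: float, size_bytes: int,
                   link_bps: float = 1e9, rtt_s: float = 12e-6) -> float:
    """Measured FCT divided by the assumed ideal FCT (1.0 means ideal)."""
    return measured_s / ideal_fct(size_bytes, link_bps, rtt_s)


def average_normalized_fct(flows: List[Tuple[float, int]]) -> float:
    """Mean normalized FCT over (measured_seconds, size_bytes) pairs."""
    return sum(normalized_fct(t, s) for t, s in flows) / len(flows)
```

Under these assumptions a 1500 B flow has an ideal time of 24 us, so a measured 48 us gives a normalized value of 2. Because the denominator depends on the RTT and link rate chosen, a different choice rescales every normalized value, which is one way two normalization schemes can end up with very different ranges.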

For our datamining distribution, where the simulations ran with flow sizes ranging from 1 to 666667, we get a result very similar to that of the websearch distribution. Once again, a large number of the flows tend towards the lower end of this range. We found that the general shape of our plots matched the results described in the paper, and the normalized results show our minTCP + pFabric to be very close to ideal. Our average flow completion times differ from the paper’s, with a range of 0 to 700 instead of 0 to 10, once again because of a difference in our normalization scheme or possibly as a result of the scaling of our topology.

7. Challenges

Conceptual Challenges:

Conceptually, the paper was lacking a few details that were necessary for the implementation, namely the cumulative distribution functions (CDFs) and the normalization scheme used to obtain their results. Thankfully, we were able to get in contact with Mohammad Alizadeh to discuss how the team who wrote the paper decided these values. From Mohammad we obtained CDFs representing typical workloads that one might encounter in a datacenter: a datamining workload and a web search workload. Using these, it was possible to create a system in which a randomized selection of nodes opened flows of varying size based on these distributions. We also had to figure out how to normalize the raw values that we got from the experiment, since the paper only mentioned that the results were “normalized with respect to the best possible completion time for each flow size”. Since there are a variety of normalization schemes, we contacted Mohammad and he gave us an estimate of how the writers normalized the flow completion times (FCTs).
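Drawing flow sizes from an empirical CDF, as described above, can be done with inverse-transform sampling. The sketch below is a minimal illustration: `TOY_CDF` is a made-up three-point distribution, not one of the CDFs we received, and the function name is hypothetical.

```python
import bisect
import random
from typing import List, Tuple

# Hypothetical CDF as (flow size, cumulative probability) points.
# These numbers are invented for illustration only.
TOY_CDF: List[Tuple[int, float]] = [(1, 0.5), (10, 0.8), (20000, 1.0)]


def sample_flow_size(cdf: List[Tuple[int, float]], rng: random.Random) -> int:
    """Pick the first size whose cumulative probability is >= a uniform draw."""
    probs = [p for _, p in cdf]
    u = rng.random()  # uniform in [0, 1)
    i = bisect.bisect_left(probs, u)
    return cdf[min(i, len(cdf) - 1)][0]


rng = random.Random(0)
sizes = [sample_flow_size(TOY_CDF, rng) for _ in range(10_000)]
```

This version returns only the sizes listed in the CDF; interpolating between points is another option and would be a further assumption about how the distribution should be read.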

Implementation-wise, we ran into quite a few problems. Ns2 (which was used as the simulator in the 2012 pFabric paper) was last updated in 2011 and is quite a deprecated system. Errors and solutions varied wildly between operating systems and Ns versions, and it took quite a bit of trial and error to install it correctly. For a while we got by with an Ns package available on Ubuntu, but implementing pFabric required us to install Ns2 manually. The only way to work around the installation problems was to handle them one by one and look through the forums for discussions of similar errors. In addition, neither the version of ns2 nor the operating system used was specified in the paper.

We decided to scale down the implementation from a 3-layer 6-pod fat-tree with 54 hosts to a 3-layer 4-pod fat-tree with 16 hosts. This was to account for how computationally expensive the simulation was when trying to match the original scale. This scaling became an issue in particular when we tried to set the minTCP RTO. The 40 microseconds suggested in the paper resulted in packets never being delivered, so we had to allow more time for packet delivery in minTCP; we eventually settled on about 40 milliseconds.

Ns2 simulations turned out to be extremely expensive in both disk space and computation. Trace and nam files for a large number of flows often resulted in “disk out of space” errors. Because of this, we started deleting intermediate files as soon as they were parsed and eventually (thanks again to Mohammad) came across a means of recording the flow completion times without recording the traces for every flow. This saved disk space and the time required to parse each of the generated trace files. Despite these improvements, the ns2 simulations are still fairly expensive and take hours to run.

We ran into some issues with regards to adding priority to packets depending on the remaining flow size. Our solution to this was to leverage the ftp application which indirectly passes the flow size to the TCP flow. Separating the flow size to only apply per flow took some time.
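The idea of tagging each packet with a priority derived from the remaining flow size can be sketched as follows. This is not our ns2 code: the function name is hypothetical, and it assumes the priority of a packet is the number of bytes left in its flow at the moment the packet is sent, with 1500 B packets.

```python
from typing import Iterator, Tuple


def tag_packets(flow_bytes: int, packet_bytes: int = 1500) -> Iterator[Tuple[int, int]]:
    """Yield (payload_bytes, priority) for each packet of a single flow.

    Assumed priority: bytes still unsent when the packet leaves, so later
    packets of the same flow carry smaller (more urgent) values.
    """
    remaining = flow_bytes
    while remaining > 0:
        payload = min(packet_bytes, remaining)
        yield payload, remaining
        remaining -= payload


# A 4000 B flow produces three packets with decreasing priority values.
packets = list(tag_packets(4000))
```

Because the counter lives inside one call, each flow tracks its own remaining size, which mirrors the per-flow separation mentioned above.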

8. Critique

From our results, pFabric provides a means of improving datacenter performance, particularly for flow size distributions with a large number of small flows and a small number of large flows. One issue we found was with the assumed RTO of 40 us in minTCP. This number did not work for us, and we found that flow completion times changed drastically when it was adjusted. We discovered that too small an RTO resulted in too many packet drops and ultimately left pFabric unable to complete, so we set the value to 40 ms. We would suggest that while pFabric offers an excellent prioritization scheme for small flows, it is important to remember that this scheme still relies on the transport protocol it is built on top of. Otherwise, we were impressed with the performance that we got out of minTCP + pFabric in comparison to the values we got from TCP. In our results, the flow completion times for each load factor in minTCP+pFabric are as near to ideal as could be desired.

9. Platform

We used ns-2.35 to replicate the experiment as closely as possible, and we ran it on Amazon EC2 with Ubuntu 12.04 LTS (GNU/Linux 3.2.16-mptcp-dctcp_ x86_64). We found this AMI in the community AMIs under the title cs244-mininet-mptcp-dctcp (ami-a04ac690). The instance type we used is c3.4xlarge with a 30 GB disk drive, because we needed high network performance and additional disk space to handle the large simulation size. We used this version of Ubuntu because we found it worked with the version of ns2 we were running.

The paper used a 3-layer fat-tree with 6 pods resulting in 54 hosts with 10 Gbps links with 12 us RTT delay between them. We used a 3-layer fat-tree with 4 pods resulting in 16 hosts with 1 Gbps links with 12 us RTT delay between them. Our priorities are essentially unlimited in terms of range as per the paper.
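The host counts above are consistent with the standard k-ary fat-tree construction, in which k pods built from k-port switches support k³/4 hosts. The short check below assumes that construction; the function names are ours.

```python
def fat_tree_hosts(k: int) -> int:
    """Hosts in a standard k-ary fat-tree: k pods * (k/2 edge switches) * (k/2 hosts)."""
    if k <= 0 or k % 2 != 0:
        raise ValueError("k must be a positive even number")
    return k ** 3 // 4


def fat_tree_switches(k: int) -> int:
    """Switches in the same construction: k per pod plus (k/2)^2 core switches."""
    if k <= 0 or k % 2 != 0:
        raise ValueError("k must be a positive even number")
    return k * k + (k // 2) ** 2


print(fat_tree_hosts(4), fat_tree_hosts(6))
```

This gives 16 hosts for 4 pods and 54 hosts for 6 pods, matching the two setups, so the scaled-down topology has roughly 30% of the hosts of the original.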

10. README

The setup requirements, the code we used and the README file are available at the link below:

https://bitbucket.org/GrossMark/cs244-pa3

11. Sources

1. M. Alizadeh, S. Yang, et al. Deconstructing Datacenter Packet Transport. In HotNets 2012.

It was very easy to reproduce the results. I followed the instructions and got the same exact graphs as shown in the blog post. The simulation script warned me that the experiment would take up to 11 hours to run both the datamining and websearch data sets, but it only took about 3 hours on the specified EC2 instance. This is perhaps because this blog writeup says to use a c3.4xlarge instance, whereas the instructions in the readme on bitbucket say to use a c4.4xlarge. It’s also a little disconcerting that the Rscript commands at the end of the script display “null device \n 1”, but neither of these problems seemed to negatively impact the results at all. Reproducibility score: 5/5. -Kevin + Patrick

Kevin and Patrick,

First of all, thanks for your comments.

As you mentioned, the “11 hours” estimate that was previously displayed on the screen during the simulation run was a typo in the warning message. In reality, it only takes about 3 hours for the simulations to finish. We have fixed the typo in the warning message and it should now print the correct information.

Furthermore, the message “null device \n 1” you mentioned is also a benign information message that will be printed on the screen every time our plotting script saves a jpg plot file on the system. This message just notifies the user that plot is ready and saved in a file.