by Vikas Yendluri & Alex Eckert

Original Paper

The goal of TCP Fast Open (TFO) is to reduce the latency of TCP flows by piggybacking data exchange on the TCP handshake, cutting out one round trip (RTT). This is motivated by the fact that most web services use short TCP flows, so the handshake itself is a major source of latency: on services run by Google, the TCP handshake accounts for between 10% and 30% of latency. Latency is bounded by the RTT, which is in turn constrained by the speed of light; thus, cutting down the number of round trips is of prime importance. An alternative that has been proposed is to keep TCP connections alive, but this is not sufficient for three reasons: browsers open parallel connections to download web pages, websites shard resources across domains, and middleboxes such as NATs terminate idle TCP connections. The main result of the paper is that eliminating an RTT by attaching data to the first SYN packet markedly reduces latency for short flows. This makes sense intuitively and is demonstrated in Table 1 of the original paper, replicated below, which shows the improvement in latency when a client fetches common web pages using TCP Fast Open, with the RTT between client and server varied from 20 ms to 200 ms. The rest of the paper is dedicated to complications that arise from changing the TCP handshake in this way: mainly security, but also NATs, backward compatibility, and implementation. Altogether, the paper shows both the benefit and the deployability of TCP Fast Open.
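
As a back-of-the-envelope check of the intuition that removing one RTT matters most for short flows, here is a small model of a single fetch on a fresh connection. It is our own simplification, not a model from the paper: it assumes no loss, no server processing time, unlimited bandwidth, and a client that already holds a TFO cookie. The parameter `data_rtts` is a hypothetical name for the number of round trips needed to move the request and response once the connection is usable.

```python
def short_flow_latency_ms(rtt_ms: float, data_rtts: int = 1, tfo: bool = False) -> float:
    """Idealized latency of one fetch on a fresh TCP connection (assumptions above)."""
    # Without TFO the client must finish the handshake (1 RTT) before sending
    # the request; with TFO the request rides in the SYN, so that RTT overlaps.
    handshake_rtts = 0 if tfo else 1
    return (handshake_rtts + data_rtts) * rtt_ms


def relative_saving(rtt_ms: float, data_rtts: int = 1) -> float:
    """Fraction of the fetch latency removed by TFO under this model."""
    base = short_flow_latency_ms(rtt_ms, data_rtts, tfo=False)
    fast = short_flow_latency_ms(rtt_ms, data_rtts, tfo=True)
    return (base - fast) / base


for rtt in (20, 100, 200):
    for data_rtts in (1, 4):
        print(f"RTT={rtt}ms data_rtts={data_rtts}: "
              f"{short_flow_latency_ms(rtt, data_rtts):.0f}ms -> "
              f"{short_flow_latency_ms(rtt, data_rtts, tfo=True):.0f}ms "
              f"({relative_saving(rtt, data_rtts):.0%} saved)")
```

Under these assumptions a one-round-trip fetch halves its latency at any RTT, while a fetch needing four data round trips saves only 20%. The absolute saving is always one RTT per fresh connection, so it grows as the RTT grows.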

Reproducing Results

Our primary goal was to reproduce Table 1. This is the heart of the paper, as it verifies the performance gains of TCP Fast Open on commonly accessed websites. We were able to reproduce the results of the paper, though we found the improvement to be larger than the paper reports. Our results are pasted below. In this table, amazon.com, nytimes.com, wsj.com, and tcp.com correspond to local offline copies of amazon.com, nytimes.com, wsj.com, and the Wikipedia page on TCP, respectively.
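
Each row of the table compares download times with and without TFO and reports a percent improvement. The helper below is a hypothetical sketch of how such a figure can be computed from repeated timings, assuming improvement is measured relative to the non-TFO time and that each configuration is summarized by its median run; it is not the script from our repository.

```python
from statistics import median
from typing import Sequence


def percent_improvement(no_tfo_s: Sequence[float], tfo_s: Sequence[float]) -> float:
    """Percent reduction in download time with TFO, relative to the non-TFO time.

    Each argument holds repeated download times (seconds) for one page at one
    RTT. Medians are used so that a single slow run does not dominate.
    """
    if not no_tfo_s or not tfo_s:
        raise ValueError("need at least one run per configuration")
    base = median(no_tfo_s)
    fast = median(tfo_s)
    return 100.0 * (base - fast) / base
```

For example, hypothetical timings of 2.0 s without TFO and 1.5 s with TFO give 25.0; a negative value means the TFO runs were slower.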

Implementation

Reproducing the results was made much simpler by the fact that Linux 3.7 implements TCP Fast Open in the kernel. TFO is enabled system-wide by writing to /proc/sys/net/ipv4/tcp_fastopen. However, for an application to use TFO, it must also opt in through the socket API (a socket option on the server's listening socket, a send flag on the client), which rules out many simple utilities such as wget. We downloaded complete local copies of amazon.com, wsj.com, nytimes.com, and the Wikipedia page on TCP. These were served by a modified version of Python's SimpleHTTPServer that enables TFO on the server side. To make client web-page requests using fast open, we used an open-source project called mget, which is similar to wget but enables fast open when the underlying operating system supports it. Mget also emulates a browser better than wget does, since it parallelizes connections when making multiple requests. To isolate the performance gains due to TFO, we disabled caching and HTTP keep-alive. To control RTTs, we created a single-hop Mininet topology and varied the RTT on that hop among 20 ms, 100 ms, and 200 ms, as in the original paper. We time page downloads using the Unix time utility (/usr/bin/time). Our experiments run on Amazon AWS, on a modified image based on Ubuntu 14.04 and Linux kernel 3.13.
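
The modified server is not shown here, so the following only sketches the idea under our own assumptions: it uses Python 3's http.server (the successor of Python 2's SimpleHTTPServer), and the class name TFOHTTPServer and the queue length of 5 are illustrative. On Linux, a server opts into TFO by setting the TCP_FASTOPEN option on its listening socket before listen(); the option value is the maximum number of pending TFO connections.

```python
import http.server
import socket

# Linux value of TCP_FASTOPEN, used only if this Python build's socket
# module does not export the constant.
TCP_FASTOPEN = getattr(socket, "TCP_FASTOPEN", 23)


class TFOHTTPServer(http.server.HTTPServer):
    """HTTPServer whose listening socket requests server-side TCP Fast Open."""

    # Illustrative maximum number of pending TFO connections (the option value).
    tfo_queue_len = 5

    def server_bind(self):
        # Set the option before bind(); server_activate() calls listen()
        # only after this method returns.
        try:
            self.socket.setsockopt(socket.IPPROTO_TCP, TCP_FASTOPEN, self.tfo_queue_len)
            self.tfo_option_set = True
        except OSError:
            # Option unsupported on this OS/kernel: fall back to a plain server.
            self.tfo_option_set = False
        super().server_bind()
```

For example, `TFOHTTPServer(("", 8000), http.server.SimpleHTTPRequestHandler).serve_forever()` would serve the current directory, such as the saved page copies. The option being accepted does not by itself enable TFO: the server bit (0x2) in /proc/sys/net/ipv4/tcp_fastopen must also be set.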

Platform

Kernel: Linux 3.13.0-24-generic
OS: Ubuntu 14.04
Simulator: Mininet

We chose Linux 3.13 because it implements TCP Fast Open in the kernel, and Ubuntu so that we could easily apt-get install dependencies and make the setup scriptable. Mininet allowed us to vary the RTT on the link deterministically in order to get meaningful results. The code should be reproducible on other Linux distributions, as long as they run Linux ≥ 3.7.
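
Because TFO needs Linux 3.7 or newer, a setup script can check the running kernel before enabling it. The helper below is hypothetical, not part of our scripts. It parses release strings such as 3.13.0-24-generic and compares (major, minor) as integer tuples, since a plain string comparison would wrongly order "3.13" before "3.7".

```python
import platform
import re
from typing import Optional, Tuple


def kernel_version(release: str) -> Tuple[int, int]:
    """Return (major, minor) from a kernel release string like '3.13.0-24-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_supports_tfo(release: Optional[str] = None) -> bool:
    """True if the given (or running) Linux kernel is at least 3.7."""
    if release is None:
        if platform.system() != "Linux":
            return False
        release = platform.release()
    # Tuple comparison orders (3, 13) after (3, 7), unlike string comparison.
    return kernel_version(release) >= (3, 7)
```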

Challenges

The first challenge we faced was configuring our topology to enable and disable TFO at will. Even though the Linux kernel allows a user to enable TFO, most web servers and client libraries don't use TFO by default. We got around this by configuring our own web server and using mget. The authors used Chromium instead of mget, but we preferred mget because Chromium would have required adding a windowing system to our test environment. Our Python testing script enables and disables TFO by writing the value in /proc/sys/net/ipv4/tcp_fastopen. To enable TFO, we set the value to 519 (although the common convention is 3, we used 519 for reasons described below). To disable TFO, we set the value to 0.
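
A minimal sketch of such a toggle, assuming the script runs as root; the function name set_tcp_fastopen and its path parameter are hypothetical, not taken from our repository.

```python
from pathlib import Path

TFO_SYSCTL = Path("/proc/sys/net/ipv4/tcp_fastopen")
TFO_ON = 519  # value used in our experiments (see the discussion of 519)
TFO_OFF = 0


def set_tcp_fastopen(value: int, path: Path = TFO_SYSCTL) -> int:
    """Write `value` to the tcp_fastopen sysctl and return the previous value.

    Writing under /proc/sys requires root. `path` is a parameter only so the
    function can be exercised against an ordinary file.
    """
    previous = int(path.read_text().strip())
    path.write_text(f"{value}\n")
    return previous
```

Calling set_tcp_fastopen(TFO_ON) before the TFO runs and set_tcp_fastopen(TFO_OFF) before the baseline runs matches the procedure above, and the returned value lets a script restore the original setting afterwards. From a shell, `sysctl -w net.ipv4.tcp_fastopen=519` has the same effect.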

However, we ran into issues with the mechanics of enabling TFO. Before a client and server can use TFO, the client needs to request a TFO cookie from the server (this is a security measure). Thus, the first request from a client to a server doesn't use TFO, but instead obtains this cookie so that TFO can be used on future connections. During our experiments, we found that this cookie was never being set up; as a result, no data was being piggybacked on the TCP handshake. To bypass this, we set /proc/sys/net/ipv4/tcp_fastopen to 519 (versus 3, the conventional setting to enable TFO on both client and server). The value 519 is the bitwise OR of 0x200, 0x1, 0x2, and 0x4, which enables the settings described in the tcp_fastopen section of the kernel's ip-sysctl documentation. This allows the client and server to skip the TFO cookie and start communicating via TFO right away. This has the same performance characteristics as normal TFO once a cookie has been obtained, and allows us to measure the performance benefits of TFO itself while ignoring the overhead of setting up the TFO cookie. Our correspondence with the authors of the original Google paper indicated that they ignore the cookie overhead in their results as well. After doing this, we verified that TFO was being used by logging packet transmissions with tcpdump and Wireshark.
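
The arithmetic behind 519 can be checked directly. The constant names below are our own labels; the bit meanings follow the tcp_fastopen entry of the kernel's ip-sysctl documentation.

```python
# Bits of net.ipv4.tcp_fastopen (names are illustrative labels).
TFO_CLIENT_ENABLE = 0x1        # client: send data in the opening SYN
TFO_SERVER_ENABLE = 0x2        # server: accept data in the opening SYN
TFO_CLIENT_NO_COOKIE = 0x4     # client: send SYN data even without a cookie
TFO_SERVER_NO_COOKIE = 0x200   # server: accept SYN data with no cookie option

BIT_NAMES = {
    TFO_CLIENT_ENABLE: "client enable",
    TFO_SERVER_ENABLE: "server enable",
    TFO_CLIENT_NO_COOKIE: "client sends data without cookie",
    TFO_SERVER_NO_COOKIE: "server accepts data without cookie",
}

CONVENTIONAL = TFO_CLIENT_ENABLE | TFO_SERVER_ENABLE                      # 3
NO_COOKIES = CONVENTIONAL | TFO_CLIENT_NO_COOKIE | TFO_SERVER_NO_COOKIE  # 519


def describe(value: int) -> list:
    """List the known flags set in a tcp_fastopen value, lowest bit first."""
    return [name for bit, name in sorted(BIT_NAMES.items()) if value & bit]


print(CONVENTIONAL, describe(CONVENTIONAL))
print(NO_COOKIES, describe(NO_COOKIES))
```

The flags must be combined with OR: ANDing these disjoint bits together would give 0, which disables TFO entirely.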

The last challenge was setting up completely local copies of web pages to download. This gives us full control over link latency and RTT through Mininet, instead of falling victim to varying RTTs on the public Internet. The authors of the original Google paper did this with the web-page-replay tool. To simplify our implementation, we saved local copies of amazon.com, nytimes.com, wsj.com, and the TCP Wikipedia page with Mozilla Firefox, which downloads not only the static HTML page but also its other resources (CSS, JavaScript, images) and rewrites the links in the HTML to reference the local versions. We do miss some resources (for instance, advertisements or other parts of the page loaded with AJAX). However, this is not a major issue, since page download times with and without TFO are both measured on the same set of pages.

Discussion & Critique

The table we produced shows significant improvement in page download times when TFO is used. In fact, we see a much larger speedup than the authors of the original paper did. While these results may seem implausible, we are confident that they reflect the effect of fast open, because the only difference between the TFO and non-TFO runs is a single kernel setting that enables or disables TFO. Furthermore, packet inspection with tcpdump and Wireshark confirms that TFO is being used. The discrepancy is more likely due to differences between the tools in our testing environment and those used by the original authors.

First, our results show a larger relative improvement with TFO than the original paper does. This is likely due to a difference in how requests for a page's resources are parallelized: we used mget, while the original authors used Chromium. Both parallelize connections, but they are unlikely to share the same parallelization scheme. Second, our absolute page download times differ from those in the original paper. This is likely because Chromium is a more sophisticated piece of software than mget and uses more aggressive optimization techniques. In fact, we disabled some of these optimizations, such as server caching and HTTP keep-alive, in order to keep results from multiple runs independent.

All in all, our results show that the thesis of the TCP Fast Open paper holds: using TFO improves page download time. The download of a web page is dominated by many small static resources (e.g., CSS and JavaScript files) that can be fetched in a few RTTs; for these, piggybacking data on the handshake offers a large relative gain. For longer flows the gains matter less, but web page downloads involve few long flows and many short flows that can be parallelized. Thus, we find TFO to be a very effective protocol change for improving performance. It is also worth noting that porting existing network software to use TFO is quite easy. On Linux 3.7 or later, using TFO is simply a matter of passing a few extra parameters to the system calls that open a socket and write data to it: the TCP_FASTOPEN socket option on the server's listening socket, and the MSG_FASTOPEN flag to sendto() in place of connect() on the client.
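
The client-side change can be sketched in a few lines. The function name send_with_tfo is hypothetical, and the sketch assumes Linux semantics: a blocking sendto() with MSG_FASTOPEN puts the payload in the SYN when a usable cookie (or the no-cookie sysctl bit) is available, and otherwise completes a normal handshake before sending. If the kernel rejects the flag, for example because the client bit of tcp_fastopen is unset or the OS is not Linux, it falls back to an ordinary connect().

```python
import socket

# Linux value of MSG_FASTOPEN, used only if this Python build's socket
# module does not export the constant.
MSG_FASTOPEN = getattr(socket, "MSG_FASTOPEN", 0x20000000)


def send_with_tfo(addr, payload: bytes, timeout: float = 5.0) -> socket.socket:
    """Connect to `addr` and send `payload`, using TCP Fast Open when possible."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # Replaces connect() + send(). The socket is still blocking here, so
        # without a cookie the kernel waits for the handshake, then sends.
        sent = sock.sendto(payload, MSG_FASTOPEN, addr)
    except OSError:
        # Flag unsupported or rejected: plain three-way handshake instead.
        sock.close()
        sock = socket.create_connection(addr, timeout=timeout)
        sent = 0
    sock.settimeout(timeout)
    sock.sendall(payload[sent:])  # send anything the first call did not
    return sock
```

The blocking sendto() is deliberate: with a timeout set, the socket becomes non-blocking and a cookieless TFO attempt would surface as an EINPROGRESS error rather than a completed send.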

Exploration

As a fun extension to the project, we generated a table similar to Table 1 of the original paper, but using the twenty most visited sites according to Alexa (pulled June 1, 2014). The results are pasted below. They confirm that TFO markedly improves page download times.

Readme/Installation

GitHub Repo: https://github.com/vikasuy/cs244-proj3

AMI: ami-4d99ea7d (US-West, Oregon)

How to install:

There are two ways to install our test environment:

- [Easiest way] Create an Ubuntu 14.04, Linux kernel 3.13 EC2 instance using our AMI. It comes preloaded with our dependencies and test scripts.

- [Easy way] Create a c3.large instance on Amazon EC2 running 64-bit Ubuntu 14.04, and run the following commands:

How to reproduce our results

To recreate our Table 1, run the commands below:

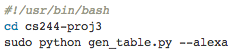

To recreate our extended results (Alexa Top 20 sites), run the commands below:

Acknowledgements

We would like to thank Tim Ruehsen, the author of mget, for helping us when we had questions and for making his library open source; Sivasankar Radhakrishnan, an author of the TCP Fast Open paper, for answering general questions about TCP Fast Open that we had early on; and Milad Sharif for helping us with everything else along the way.

4: We were able to reproduce results that support the general gist of TFO improving PLT. However, there seems to be a significant amount of noise in the measurements – in 10 runs, each run had at least one instance of negative percent improvement.

Regarding sensitivity analysis, it was interesting to consider TFO’s performance benefits on the broader set of web sites. However, it would have also been interesting to see experiments that explored the diminishing returns of TFO as flow size increases.
