Laura Garrity, Suzanne Stathatos

INTRODUCTION

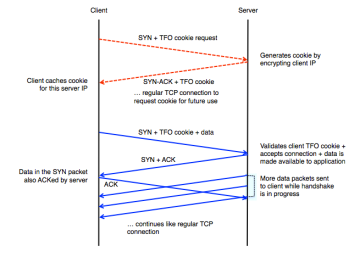

Anyone who browses the web, for leisure or for work, has wished that a page would load faster. Those familiar with the underlying protocol may wonder why the three-way handshake must complete in full before even one HTTP GET request is issued. Before the TCP Fast Open paper was published, standard TCP required a three-way handshake to establish a connection, and only once the connection was established could any request for data be transmitted. The three-way handshake is illustrated below (Figure 1).

Figure 1: Three-Way Handshake

Instead of waiting for the full handshake to complete, the authors proposed sending data while the handshake was still in progress, without waiting for the handshake ACK. What follows are our findings as we set out to understand their experiment and to reproduce their results.

Goals: What problem was the original paper trying to solve? As mentioned in the introduction, the authors of this paper set out to increase the speed of fetching web pages. They observed that while web pages have grown over time, networking protocols have not scaled accordingly. As a result, web transfer latency is dominated by round-trip time (RTT). To reduce the number of round trips a transfer needs (the RTT itself is a property of the path and does not change), they proposed sending data while the handshake was still in progress rather than waiting for the handshake ACK. While this idea was not completely novel (see T/TCP, RFC 1644), the authors also set out to solve the security problems that earlier researchers had faced. The solution they arrived at was a cookie that lets the server verify the identity of the client. While the exchange of the cookie requires an initial full three-way handshake, the benefit comes after that first contact, whenever a new connection is opened. The details of the TCP Fast Open handshake are illustrated in Figure 2. The details of the cookie, while very interesting, are not discussed in this project, as we focused instead on the page load time gains.
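To make the round-trip argument concrete, here is a minimal sketch of an idealized latency model. It is our own simplification, not the model used in the paper: it ignores server processing, packet loss, and bandwidth, and the function names and the `data_round_trips` parameter are hypothetical.

```python
def fetch_time_ms(rtt_ms, data_round_trips=1, fast_open=False):
    """Idealized fetch time: round trips multiplied by the RTT.

    Assumes a repeat connection for TFO (cookie already cached), so the
    request rides in the SYN and the handshake costs no extra round trip.
    """
    handshake_round_trips = 0 if fast_open else 1
    return (handshake_round_trips + data_round_trips) * rtt_ms


def improvement_percent(rtt_ms, data_round_trips=1):
    """Percentage reduction in fetch time when TFO removes the handshake RTT."""
    baseline = fetch_time_ms(rtt_ms, data_round_trips, fast_open=False)
    with_tfo = fetch_time_ms(rtt_ms, data_round_trips, fast_open=True)
    return 100.0 * (baseline - with_tfo) / baseline


# A response that fits in one round trip: 200 ms without TFO, 100 ms with it.
print(fetch_time_ms(100), fetch_time_ms(100, fast_open=True))
# A transfer needing four data round trips benefits proportionally less.
print(improvement_percent(100, data_round_trips=4))
```

In this model the relative gain shrinks as a page needs more round trips for its data, which is consistent with the observation that simpler pages see larger improvements.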

Motivation: Why is the problem important/interesting? “Patience is a virtue.” A virtue that many of us are somewhat lacking. Users of the Web want content and they want it now. The Transmission Control Protocol is designed to deliver the Web’s content and to operate over a variety of network types. Often, to accelerate web browsing, web browsers open several parallel connections before making actual requests. However, in some situations this strategy contributes to high latency and low scalability. The authors noted that “small improvements in latency lead to noticeable increases in site visits and user satisfaction” and thus would lead to monetary gains for the makers of browsers. They found that for 33% of all HTTP requests, the browser spent a full RTT establishing a connection through the three-way handshake; because most HTTP responses fit in the initial TCP congestion window of 10 packets, that extra round trip roughly doubles the response time. We also found the practical application of this topic to be of interest. We are both interested in how TFO could be directly applied to increase communication/networking efficiency.

RESULTS

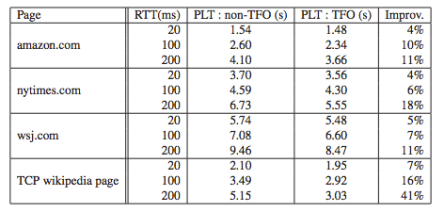

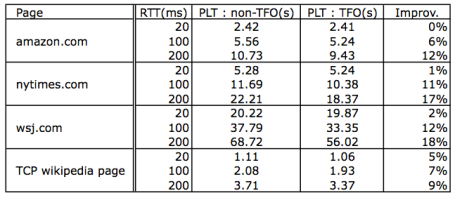

Results: What did the original authors find? The original authors used Google web page replay tool and Dummynet to benchmark the download latency for both TFO-enabled Chrome and standard Chrome. They found improvements ranging from 4%-41%, varying with RTT and type of web page served. Simpler pages had higher rates of improvement due to the dominant effect of RTT (and thus TFO speed-up). On average, though, the authors claimed that TFO reduces page load time by 10%. Their paper also addresses concerns such as dropped packets, DOS attacks, and other security limitations when using TFO, to make it easily implementable by browsers looking to improve their latency. The authors also monitored the server side for change in transaction speed. They found that TFO increased the average transaction rate from approximately 2900 transactions/second to approximately 3500 transactions/second. They attributed this increase to three factors: 1) saving an RTT for each request, 2) request being sent in SYN required less server CPU usage to process the request, and 3) one less syscall on the client-side. The authors also implemented the security cookie to validate the identity of the requestor. Since this is an added step, they examined the server CPU usage for cookie validation and found, somewhat surprisingly, that there was a range of connections per second in which CPU utilization was slightly lower when using TFO. Specifically, between 2,000 and 5,000 connections per second, TFO outperformed regular TCP (on the server side). Even outside this range (from 1,000-2,000 connections/sec and 5,000-6,000 connections/sec), CPU utilization varied minimally from regular TCP to TFO. This was attributed to the inclusion of the request within the SYN packet, which resulted in less processing for the server.

Subset Goal: What subset of results did you choose to reproduce? We chose to recreate Table 1 from this paper, which demonstrates the effect of TFO on various web pages and across various RTTs. The results of this table are as one would expect. That is, first, using TCP Fast Open decreases the time it takes to load a webpage. Additionally, the improvement increases as RTT increases.

Subset Motivation: Why that particular choice? We felt that Table 1 encompasses the main results of this paper and thus would be the most interesting to reproduce. It’s interesting to see how modifying the behavior of this protocol affects fetches of different real-life web pages. We were excited by the opportunity to extend this to a few different types of web pages, as other research has done (TCP Initial Congestion Window).

Subset Results: How well do the results match up? Explanation if you see significant differences. Our results were more or less in line with the results from the paper. In all cases, we see that the page loads the same or faster using TCP Fast Open. In general, we see larger improvements as the RTT increases. Our absolute improvement percentages aren’t as high, which could be due to a variety of factors. In

Note that our results are averaged across 10 runs for each page load time. Due to time constraints on the part of the reader, we trimmed our scripts to run only once and leave averaging as an exercise for the reader (we used numpy). We found that on occasion, TFO showed no improvement (or was a smidgen slower). As a result, we feel that it is best to average across multiple runs.
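For readers who want to do the averaging themselves, the following is a minimal sketch of one way to do it. It uses the standard library's `statistics.mean` rather than numpy so that it has no dependencies; the `summarize` helper and the sample timings are hypothetical and are not taken from our measurements.

```python
from statistics import mean


def summarize(plt_tcp_s, plt_tfo_s):
    """Average page load times (seconds) and report the percent improvement.

    plt_tcp_s: load times measured with regular TCP, one entry per run.
    plt_tfo_s: load times measured with TCP Fast Open, one entry per run.
    """
    tcp_mean = mean(plt_tcp_s)
    tfo_mean = mean(plt_tfo_s)
    improvement = 100.0 * (tcp_mean - tfo_mean) / tcp_mean
    return tcp_mean, tfo_mean, improvement


# Made-up timings for illustration only.
tcp_runs = [2.0, 4.0]
tfo_runs = [1.0, 2.0]
tcp_mean, tfo_mean, improvement = summarize(tcp_runs, tfo_runs)
print("TCP %.2fs, TFO %.2fs, improvement %.1f%%" % (tcp_mean, tfo_mean, improvement))
```

Averaging before computing the percentage keeps a single slow run from dominating the reported improvement.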

ANALYSIS

Challenges: What challenges did you face in implementation? We had a few clarifying questions to ask the authors, which they graciously answered for us. We wanted to confirm that the results presented in Table 1 didn’t involve the cookie exchange. They confirmed that they did not, and thus we were able to ignore the cookie entirely. The reason is that the cookie exchange takes place in the first three-way handshake (see Figure 2). This table was created once the cookie was already cached, meaning that the only effect the cookie would have had is the decryption on the server side to ensure the client was who it claimed to be. Since this time is negligible, we ignored it for these reproductions.

In our communication with the researchers, they suggested that we use the same tools (Google webpage replay and dummynet) that they used during their research to enable and observe TCP Fast Open. However, presumably due to updates, bug reports, and differences in the code between 2010 and now, we struggled to get chrome-replay-tool working. We found a few fixes online to several of the python scripts, which enabled us to get the basic recording functions working; however, we couldn’t get the program to run in replay mode successfully. We would see a lot of misses and the program would hang. We then tried to get dummynet to work with chrome-replay-tool, but ran into errors there as well. As a result, we switched back to our initial idea of using Mininet.

It was a challenge to get our internal “server” on Mininet working (for more details see the Setup section below). We started by grabbing only the .html, which was simple and easy. However, we next wanted to get the full web page (including css/javascript/etc). We first tried wget -r, but found that it was pulling a lot of extraneous information. Eventually we settled on a set of flags to pass to wget (-E -H -k -K -p) to pull everything locally. The biggest challenge in reproducing these results was getting TFO to work. It took us a few tries to get the tcp_fastopen flag working on our image, but we eventually got echo "3" > /proc/sys/net/ipv4/tcp_fastopen to set the flag (we also could have done it with a sysctl call). To test whether TFO would work without Mininet (and without controlling RTTs), we ran a request from one Amazon EC2 instance to the Internet, and we found a significant improvement in PLT. Client-side support for TCP Fast Open was added in Linux 3.6 (server-side support followed in 3.7), so we upgraded our system to Linux 3.14. We chose a more recent version to hopefully reap the benefits of small fixes that were made in the first few revisions (and at the suggestion of one of the original paper authors, Yuchung Cheng).

Critique: Critique on the main thesis that you decided to explore – does the thesis hold well, has it been outdated, does it depend on specific assumptions not elucidated in the original paper? The main thesis we decided to explore is that using TCP Fast Open improves page load time. While we were able to replicate the table, we were not able to do so easily and eventually resorted to using a system with TCP Fast Open enabled by default. We were also able to replicate the table by modifying the system’s kernel settings outside of Mininet and fetching pages from the Internet, though in these cases we had no control over the RTT, congestion, or other network conditions. In both setups, we found that Fast Open did, in the majority of cases, speed up our transfer time. This thesis still holds, as the simple fact is that a connection using TCP Fast Open starts sending data sooner than one without it. One source of variance that was impossible to account for is the change in the web pages from when the paper was released in 2011 to when we tried to replicate the results in 2014. These websites have clearly changed over the years and this could certainly affect results (for instance, if a site has incorporated more javascript or has more content that must be sent back and forth). Because of this, we provided a copy of these sites in our git repo for those wishing to replicate our results.

Another piece of information that would have been very useful for reproducing the original paper's results is the google-webpage-replay archives from the tests that the authors ran. If these were available, we could have tried running them through the tool in replay mode and seen exactly what they fetched and how it behaved with and without TFO.

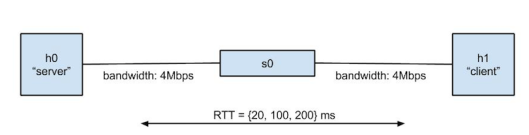

SETUP

As mentioned above, we chose to recreate these results by using Mininet. We created two hosts and internally “hosted” the web pages on one of these hosts, as illustrated in Figure 3. We used the Python HTTP Server (similar to what we had used in Project 1) and implemented a FastOpenServer to initialize TCP Fast Open (using a setsockopt call). By doing this, we were able to control the network conditions, specifically we were able to set the RTT. We loaded all of the web pages onto our “server” first to ensure that we were always using the same pages (so that things such as ads didn’t update and affect our results).
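As an illustration of the setsockopt approach described above, here is a minimal sketch of a Fast Open capable HTTP server. It is written against Python 3's `http.server` and is not the code from our repository; the queue length of 16, the `fastopen_enabled` attribute, and the `run` helper are assumptions made for this example.

```python
import socket
from http.server import HTTPServer, SimpleHTTPRequestHandler

# TCP_FASTOPEN is option 23 on Linux; fall back to that value if this
# Python build does not export the constant (assumption: Linux host).
TCP_FASTOPEN = getattr(socket, "TCP_FASTOPEN", 23)


class FastOpenServer(HTTPServer):
    """HTTPServer that asks the kernel to accept data carried in the SYN."""

    fastopen_queue_len = 16  # hypothetical limit on pending TFO requests

    def server_bind(self):
        super().server_bind()
        try:
            # Must be set on the listening socket; the value is the maximum
            # number of Fast Open connections awaiting the final ACK.
            self.socket.setsockopt(
                socket.IPPROTO_TCP, TCP_FASTOPEN, self.fastopen_queue_len
            )
            self.fastopen_enabled = True
        except OSError:
            # Kernel or platform without TFO support: serve as plain TCP.
            self.fastopen_enabled = False


def run(port=8000):
    """Serve the current directory; call this from the server host."""
    server = FastOpenServer(("0.0.0.0", port), SimpleHTTPRequestHandler)
    print("TFO requested:", server.fastopen_enabled)
    server.serve_forever()
```

The socket option only requests Fast Open on the listener. Whether data actually rides in the SYN also depends on the kernel's tcp_fastopen setting, so a packet capture remains the reliable check.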

We used mget to fetch the web pages from the server, as we had scanned some documentation that indicated issues with wget and TFO. It is possible that we could have gotten wget working, but we chose not to spend time on that, since scanning the mget source code makes it clear that it supports TFO. We followed the Linux documentation that details how to implement TCP Fast Open on both the client and the server side. According to it, TCP Fast Open can be manually configured by changing a flag in /proc/sys/net/ipv4/tcp_fastopen. We chose to set the flag to 519 (0x200 OR 0x1 OR 0x2 OR 0x4), which enabled us to run TFO on both the server and client side (without cookies). We experimented with these flags, tried many different combinations, and examined tcpdumps to ensure that the handshake was, in fact, modified.

Because we could not change TCP Fast Open settings while in Mininet, and because we wanted to be able to specify the locally-hosted pages and RTTs, we took a different route and used two separate operating systems: one with TCP Fast Open enabled and one without. Ubuntu 14.04 is documented to have TCP Fast Open enabled by default. TCP Fast Open was supported by both Firefox and Chrome and was reported to be turned on by default in version 3.13 of the Linux kernel, the version used by Ubuntu Server 14.04. When we ran our code with tcpdump to capture the packets, data was being sent with the original SYN packet, so we could confirm that TFO was working. As listed in the table above, TFO speeds up fetching the web page by between 1 and 67 percent.
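The tcp_fastopen value of 519 mentioned in this section is a bitmask, and the sketch below decodes it. The bit descriptions are our reading of the Linux ip-sysctl documentation and cover only the four bits we used; the helper names are hypothetical.

```python
# Bits of /proc/sys/net/ipv4/tcp_fastopen that we set (descriptions are
# paraphrased from the kernel documentation, not an exhaustive list).
TFO_FLAGS = {
    0x1: "client: enable Fast Open",
    0x2: "server: enable Fast Open",
    0x4: "client: send data in SYN even without a cookie",
    0x200: "server: accept data in SYN without a cookie option",
}


def describe_tcp_fastopen(value):
    """Return the descriptions of the known bits that are set in value."""
    return [desc for bit, desc in sorted(TFO_FLAGS.items()) if value & bit]


def read_tcp_fastopen(path="/proc/sys/net/ipv4/tcp_fastopen"):
    """Read the current setting (Linux only)."""
    with open(path) as f:
        return int(f.read().strip())


print(0x200 | 0x4 | 0x2 | 0x1)  # the combined value we wrote to the flag
for line in describe_tcp_fastopen(519):
    print(line)
```

Decoding the value this way makes it easier to see which side (client or server) a given setting affects when experimenting with different combinations.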

Platform: Choice of your platform, why that particular choice and how reproducible is the entire setup, what parameters of the setup you think will affect the reproducibility the most? We chose to use Mininet for this experiment for several reasons. The first reason is that we felt most comfortable in Mininet, since we had both used it in CS144 as well as for other CS244 assignments. The second reason is that we invested a good deal of time in google-webpage-replay-tool and didn’t have much luck. The third reason is that Mininet has a good API and allowed us to do most of the things we wanted to do quickly and relatively painlessly. The entire setup is very reproducible (steps to reproduce follow this section!). There is a good deal of setup required to update kernels and potentially the OS. As mentioned above, we struggled with getting TFO to work. Everything seemed to be in place, but until we did the last update to Ubuntu 14.04, we were not able to see TFO in our packet captures. As such, we recommend using the images that we published, if at all possible.

README: Instructions on setting up and running the experiment.

Steps to Reproduce:

1. Create two t1.micro instances, one for TFO and one for non-TFO

- For TFO: use AMI cs244-tfo-sg in US-West (Oregon) / AMI ID: ami-3f4f3cof

- For non-TFO: use AMI cs244-no-tfo-sg in US-West (Oregon) / AMI ID: ami-a54e3d95

2. Fire up both instances (username: ubuntu) and clone the git repository:

git clone https://github.com/lgarrity/cs244-pa3

3. Change into the cs244-pa3 directory and run the script.

- For the TFO instance, type sudo ./run-tfo.sh

- For the non-TFO instance, type sudo ./run-no-tfo.sh

The results for each will print out at the end of the run (each run lasts approximately 5 minutes). If, at any point, you forget which instance is which, a quick way to tell them apart is to type uname -a at the prompt: the TFO instance is running Linux 3.13 and the non-TFO instance is running Linux 3.7.

NB: If you need to stop in the middle, ensure you cleanup Mininet before running again (sudo mn -c).

NB: If you are trying to reproduce without the AMI, you’ll need to ensure that you have the following installed:

- Ubuntu 14.04

- Linux kernel 3.14

- mget (with dependencies) (available here: https://github.com/rockdaboot/mget)

Sources

- Radhakrishnan, Cheng, Chu, Jain, and Raghavan. “TCP Fast Open.” Proceedings of the 7th International Conference on emerging Networking EXperiments and Technologies (CoNEXT), ACM (2011).

- Radhakrishnan, Cheng, Chu, and Jain. “TCP Fast Open.” IETF Internet Draft (2014). http://tools.ietf.org/pdf/draft-ietf-tcpm-fastopen-08.pdf

Hi,

Nice project. Two suggestions: (1) It would have been nice to be able to run the experiment on just one machine so that I could get a result that looks more like your table – it’s kind of annoying to have my output on two different terminals and without any obvious way to determine percentage improvement; (2) I would have liked to see more than 1 trial done on each run. Even if it’s easy for the reader to implement, if your table shows data from 10 trials, I think the default script should have as well.

Other than that, very well written report, easy to reproduce results, matches well with what was expected. Well done.

-Stephen
